The IBM System/360, which used an 8-bit character code, EBCDIC, was a very influential computer family; many later computers were influenced by it to a greater or lesser extent.

The DDP-16 and its descendants, such as the Honeywell 316, and the Hewlett-Packard 2115 and related minicomputers still had an old-style minicomputer architecture, not unlike that of the Digital Equipment Corporation's 18-bit PDP-4; the influence of the System/360 was limited to their choice of a 16-bit word length, which was the most common word length for a minicomputer.

The PDP-11 was a 16-bit computer whose instructions came in the same lengths as those of the System/360 and were somewhat similar in capability. It was byte-addressed instead of word-addressed, and its design strove to maintain a consistent ordering of bytes within and across words. But despite that influence, it was in no sense a copy of the 360.

The Sigma series of computers from Scientific Data Systems used a 32-bit word and was designed to closely match the System/360 in data formats, in character code, and in the capabilities of its instruction set. But its instructions were all 32 bits long, following the design principles of the computers that preceded the System/360.

And there were computers that simply copied the instruction set of the System/360; first, the RCA Spectra series of computers, then the Univac 9000 series, and later plug-compatible computers which could even run IBM operating systems and connect to IBM peripherals.

There was also the Interdata series of minicomputers, which began by implementing a 16-bit subset of the System/360 instruction set, but which went in a different direction when the architecture was extended to 32 bits.

But IBM was influential before the System/360 as well. The IBM 704 and its successors, including the IBM 7090, with their 6-bit characters and 36-bit words, appear to have deeply influenced nearly every computer made before 1964.

Every IBM 704 instruction had a three-letter assembler mnemonic, and this was true of many other computers, even the much smaller PDP-8.

Given what computers were usually used for, there did not seem to be any need for them to handle lower-case letters. Available punched-card equipment couldn't have handled them anyway, and punched cards were widely used with computers of all kinds, even though many computers went without a punched-card capability.

So word lengths were usually multiples of 6 bits. Computers that used ASCII often stored text using only 6 bits per character whenever control characters were not needed, and such computers often used upper-case-only teletypewriters as input-output devices as well.
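To make the packing arithmetic concrete, here is a small sketch. It assumes a DEC-style SIXBIT mapping, in which ASCII codes 32 through 95 (space through underscore) are shifted down to 0 through 63. That is only one of several 6-bit codes of the era. The helper names `pack_sixbit` and `unpack_sixbit` are hypothetical. With this scheme a 36-bit word holds six characters, an 18-bit word holds three, and a 24-bit word holds four, and lower-case letters simply have no code.

```python
def pack_sixbit(text: str, word_bits: int) -> list[int]:
    """Pack upper-case text into words of word_bits bits, 6 bits per character."""
    if word_bits % 6:
        raise ValueError("word length must be a multiple of 6 bits")
    per_word = word_bits // 6
    words = []
    for start in range(0, len(text), per_word):
        chunk = text[start:start + per_word].ljust(per_word)  # pad the last word with spaces
        word = 0
        for ch in chunk:
            code = ord(ch) - 32  # assumed SIXBIT mapping: ASCII 32..95 -> 0..63
            if not 0 <= code < 64:
                raise ValueError(f"{ch!r} has no 6-bit code")
            word = (word << 6) | code
        words.append(word)
    return words


def unpack_sixbit(words: list[int], word_bits: int) -> str:
    """Reverse pack_sixbit, including any padding spaces."""
    per_word = word_bits // 6
    chars = []
    for word in words:
        for i in reversed(range(per_word)):
            chars.append(chr(((word >> (6 * i)) & 0o77) + 32))
    return "".join(chars)


print([oct(w) for w in pack_sixbit("HELLO", 36)])        # ['0o504554545700']
print(repr(unpack_sixbit(pack_sixbit("HELLO", 24), 24)))  # 'HELLO   '
```

In octal, each character is exactly two digits, which is one reason octal notation suited these machines so well.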

The IBM 7090 had an address space of 32,768 words, each 36 bits in length, and the designers of many other computers felt that if this was good enough for IBM's giant and powerful mainframes, it surely would be adequate for their machines as well.

The PDP-8 and a few other computers had a word length of 12 bits.

The word length of 18 bits, half of 36 bits, was quite common. It was used on the PDP-4, and its compatible successors, the PDP-7, PDP-9, and PDP-15. But other manufacturers used it as well.

And the Control Data 1604 was constructed to go IBM one better, having a word length of 48 bits.

Each instruction in the PDP-8 was 12 bits long; the memory-reference instructions could refer either to the first 128 locations in memory, or the group of 128 locations in which the instruction was located. Different pages of 128 locations could, therefore, exchange data through the first page.

But the PDP-8 had another way to get around that limitation; instructions could specify indirect addressing, which let an instruction use all twelve bits of another location in memory as its address.
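The two rules above can be sketched as an effective-address calculation. In this sketch, bits are numbered 0 to 11 from the left, as DEC did. Bit 3 is the indirect bit, bit 4 selects page zero or the current page, and bits 5 to 11 give the 7-bit offset. The function name is hypothetical. The sketch leaves out the PDP-8's auto-indexing of locations 0o10 through 0o17 and the extended-memory fields.

```python
def pdp8_effective_address(instr: int, instr_addr: int, memory: list[int]) -> int:
    """Effective address of a PDP-8 memory-reference instruction (12-bit words).

    Sketch only: auto-indexing and extended memory are not modeled.
    """
    indirect = (instr >> 8) & 1      # bit 3: indirect addressing
    current_page = (instr >> 7) & 1  # bit 4: 0 = page zero, 1 = current page
    offset = instr & 0o177           # bits 5-11: offset within a 128-word page
    page_base = (instr_addr & 0o7600) if current_page else 0
    address = page_base | offset
    if indirect:
        # Use all 12 bits of the pointed-to word as the address.
        address = memory[address] & 0o7777
    return address


memory = [0] * 4096
memory[0o1225] = 0o4321
print(oct(pdp8_effective_address(0o1225, 0o1234, memory)))  # 0o1225: TAD, current page
print(oct(pdp8_effective_address(0o1625, 0o1234, memory)))  # 0o4321: TAD I, via 0o1225
```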

Because 4,096 locations might not be good enough, however, an option was made to allow the computer to specify a data field and an instruction field from among eight fields of 4k words, so that the computer could use a total memory of 32k words.
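A 3-bit field number combined with a 12-bit address gives a 15-bit address, and therefore 32,768 words. Roughly speaking, instruction fetches and directly addressed operands came from the instruction field. Operands reached through indirection came from the data field, while the pointer word itself was read from the instruction field. A minimal sketch with hypothetical helper names follows. It takes the already-resolved 12-bit address as input.

```python
def pdp8_extended_address(field: int, address: int) -> int:
    """Combine a 3-bit memory field with a 12-bit address into a 15-bit address."""
    if not (0 <= field < 8 and 0 <= address < 4096):
        raise ValueError("field must be 0-7 and address 0-4095")
    return (field << 12) | address


def operand_address(instruction_field: int, data_field: int,
                    effective_address: int, indirect: bool) -> int:
    """Indirect operands come from the data field, direct ones from the instruction field."""
    field = data_field if indirect else instruction_field
    return pdp8_extended_address(field, effective_address)


print(oct(operand_address(2, 5, 0o100, indirect=False)))  # 0o20100
print(oct(operand_address(2, 5, 0o100, indirect=True)))   # 0o50100
print(pdp8_extended_address(7, 0o7777) + 1)                # 32768 words in all
```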

So even the lowly PDP-8 strove to achieve parity with the 7090 in this respect.

Another, more trivial, way in which the influence of the IBM 704 was manifest was that, on the front panel of the IBM 704, you could read the machine's full official designation: IBM Electronic Data Processing Machine Type 704.

However, IBM didn't go around advertising the machine as the EDPM-704.

But compare the names of many other computers in that period...

Hmm. Only three examples? I had thought there were several more.

One reason I called attention to the fact that the maximum memory size of the 704 was 32,768 words, a limit several other computers adopted, has to do with instruction formats. The 36-bit instruction words of the IBM 7090 had room for a number of special-purpose fields. A 24-bit instruction format, however, was very well suited to addressing a memory 32,768 words in length. Since 32,768 is 2^15, a 15-bit address field leaves nine bits of a 24-bit word for the opcode, the indirect bit, and the index-register field.
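As a sketch of how neatly those fields fit, here is an encoder and decoder for one such word. The widths and the order are assumptions for illustration: a 6-bit opcode, a 2-bit index field, an indirect bit, and a 15-bit address, from the high-order end down. Real machines arranged these fields differently.

```python
# Assumed field widths and order, high-order end first (hypothetical layout).
OPCODE_BITS, INDEX_BITS, INDIRECT_BITS, ADDRESS_BITS = 6, 2, 1, 15
assert OPCODE_BITS + INDEX_BITS + INDIRECT_BITS + ADDRESS_BITS == 24


def encode(opcode: int, index: int, indirect: int, address: int) -> int:
    """Pack the four fields into one 24-bit instruction word."""
    if not (0 <= opcode < 64 and 0 <= index < 4 and indirect in (0, 1)
            and 0 <= address < 2 ** ADDRESS_BITS):
        raise ValueError("field out of range")
    return (opcode << 18) | (index << 16) | (indirect << 15) | address


def decode(word: int) -> dict[str, int]:
    """Split a 24-bit instruction word back into its fields."""
    return {
        "opcode": (word >> 18) & 0o77,
        "index": (word >> 16) & 0b11,
        "indirect": (word >> 15) & 1,
        "address": word & 0o77777,
    }


print(oct(encode(0o35, 2, 1, 0o12345)))  # 0o35512345
```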

A typical 24-bit computer could have an instruction format like this:

So if one's goal was to build a computer that was smaller, and hence much cheaper, than an IBM 7090, and yet was at least almost as good, a 24-bit word length would have seemed like a very attractive choice back then.

And it was. Quite a few manufacturers made computers with a 24-bit word length. On this page, I will examine some of those computers; another page on this site attempts to cover a more comprehensive selection of 24-bit computers, going into some detail about their architecture and design.

On this page, each of the few computers I discuss will get a brief description, but there will also be pictures to let you see what they looked like. Except for one machine with a very interesting unique feature, the machines discussed here will be the most typical members of this group of computers, and they will all be from the United States.

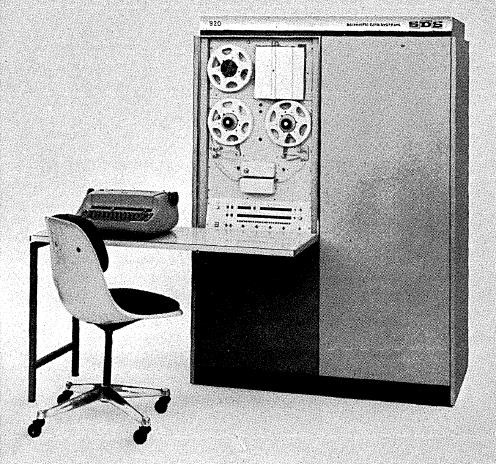

The first group of machines to be discussed here began with the SDS 910 and SDS 920, for which I have seen an advertisement in the July 1962 issue of Datamation. This advertisement claimed that these computers were both powerful and inexpensive, disproving the "erroneous cliché - that the cost per computed answer is inversely proportional to the size of the computer". That implies some formulation of Grosch's Law was already in circulation. Yet that law, which holds that the cost of a computer is proportional to the square root of its computing power, is said to have been derived from the pricing of IBM's later System/360 lineup.
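Written as a formula, Grosch's Law says that cost grows only as the square root of computing power, C = k·√P. Cost per unit of work, C/P = k/√P, therefore falls as machines get bigger, which is the "cliché" the advertisement set out to attack. A minimal sketch, in which the constant k and the sample powers are arbitrary illustrative values:

```python
import math


def grosch_cost(power: float, k: float = 1.0) -> float:
    """Cost predicted by Grosch's Law: proportional to the square root of power."""
    return k * math.sqrt(power)


def cost_per_answer(power: float, k: float = 1.0) -> float:
    """Cost divided by power, a stand-in for the cost of each computed answer."""
    return grosch_cost(power, k) / power


# A machine 4 times as powerful should cost only twice as much,
# so each computed answer costs half as much.
print(grosch_cost(4.0) / grosch_cost(1.0))          # 2.0
print(cost_per_answer(4.0) / cost_per_answer(1.0))  # 0.5
```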

The format of its instruction words was:

A later member of this series, the SDS 940, included additional features to facilitate time-sharing; a less expensive model with this feature, the SDS 945, was later made.

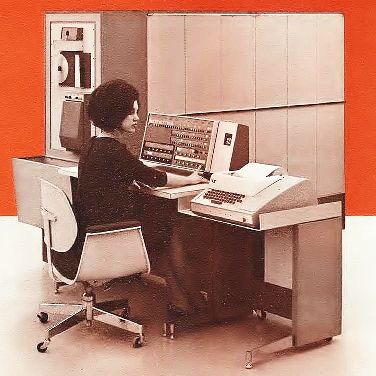

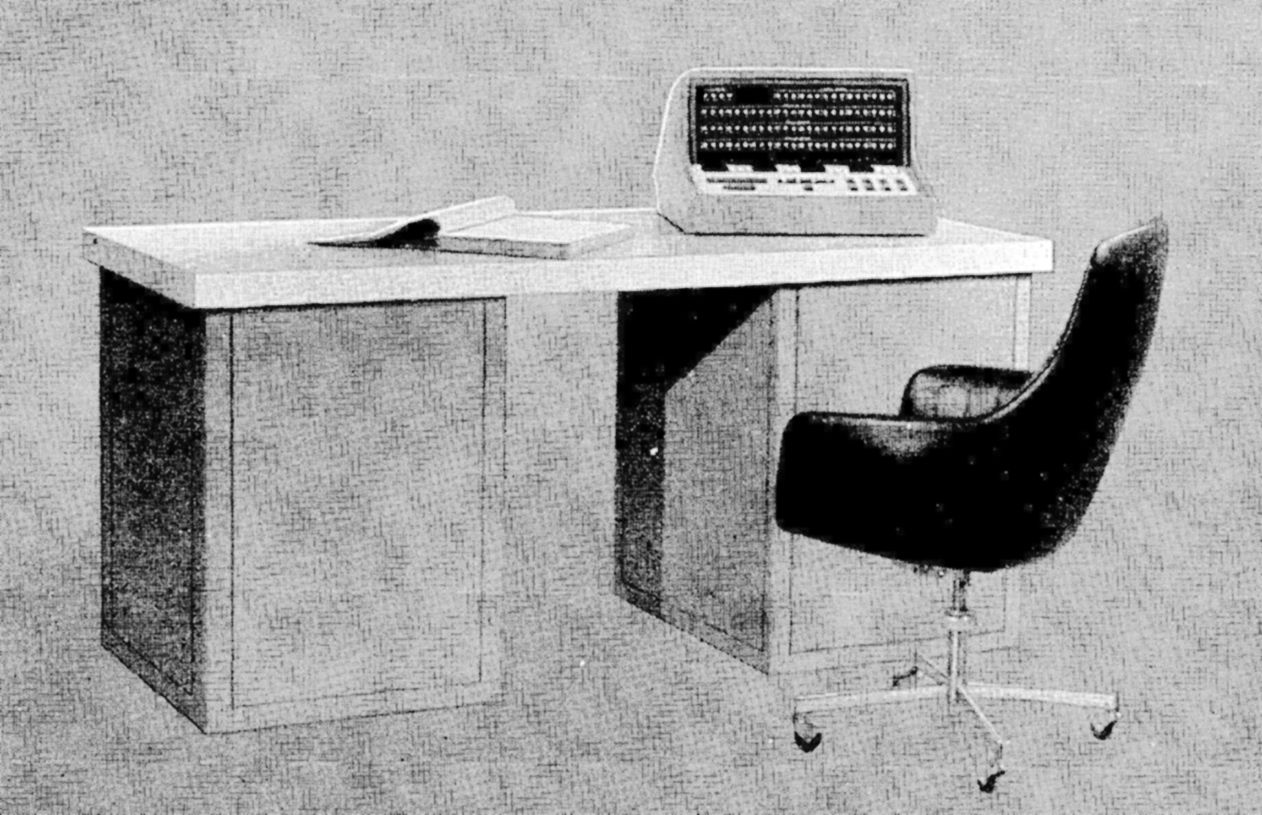

Pictured at left (in a rather small image, I admit) is the SDS 930 computer. This larger member of the series followed the initial introduction of the 910 and 920. Later on, a less expensive version of it, the SDS 925, was made. That, and the SDS 940, and a less expensive version of that, the SDS 945, were all quite similar in appearance to the SDS 930.

Both normal and double-precision floating-point numbers on these machines occupied 48 bits, with the other bits in the word containing the exponent being unused in the case of a single-precision floating-point number.
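A sketch of how one two-word layout can serve both precisions follows. The first word holds a two's complement fraction with the binary point just after the sign bit, and the low-order bits of the second word hold the exponent. For double precision, the rest of the second word extends the fraction. For single precision, those bits are ignored. The 9-bit exponent width and the function names are assumptions for illustration, not taken from an SDS manual.

```python
EXP_BITS = 9    # assumed width of the exponent field in the second word (hypothetical)
WORD_BITS = 24


def to_signed(value: int, bits: int) -> int:
    """Interpret an unsigned bit pattern as a two's complement number."""
    return value - (1 << bits) if value & (1 << (bits - 1)) else value


def decode_two_word_float(word1: int, word2: int, double: bool) -> float:
    """Decode fraction * 2**exponent from two 24-bit words (assumed layout)."""
    exponent = to_signed(word2 & ((1 << EXP_BITS) - 1), EXP_BITS)
    if double:
        # The upper bits of word2 supply additional low-order fraction bits.
        extra_bits = WORD_BITS - EXP_BITS
        fraction_bits = (word1 << extra_bits) | (word2 >> EXP_BITS)
        width = WORD_BITS + extra_bits
    else:
        # Single precision: the upper bits of word2 are unused and ignored.
        fraction_bits, width = word1, WORD_BITS
    fraction = to_signed(fraction_bits, width) / (1 << (width - 1))
    return fraction * 2.0 ** exponent


print(decode_two_word_float(0o20000000, 0o77777003, double=False))  # 4.0, junk bits ignored
print(decode_two_word_float(0o20000000, 0o40000000, double=True))   # 0.5 + 2**-24
```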

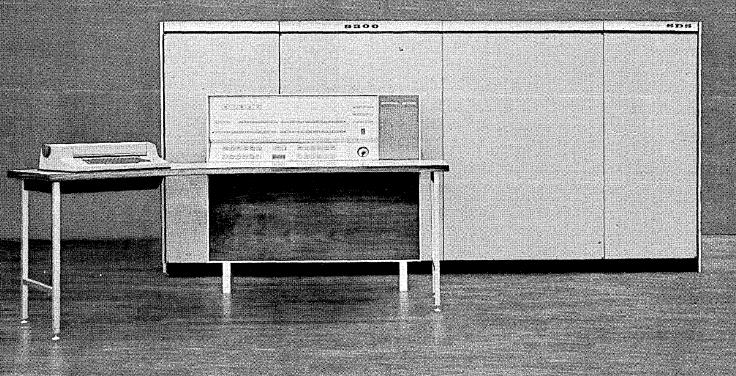

Scientific Data Systems also made a very similar machine which was larger in size, but not fully compatible, the SDS 9300. It was specifically made to satisfy the terms of a defense contract, which led to it being designed without compromising power for the sake of compatibility. It is pictured below:

The format of its instructions precisely followed the example instruction format I gave above as typical of a 24-bit computer:

One facility of note that this computer offered was a Twin Multiply instruction, that simultaneously and independently multiplied the two 12-bit halves of the accumulator with the two 12-bit halves of the memory operand.
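This is roughly what would now be called SIMD arithmetic: one instruction performing two independent operations on packed data. A minimal sketch treats the 12-bit halves as unsigned numbers and simply returns the two products as a pair. How the actual instruction handled signs, and where it placed the two results, is not modeled, and the function name is hypothetical.

```python
def twin_multiply(acc: int, operand: int) -> tuple[int, int]:
    """Multiply the high 12-bit halves and the low 12-bit halves independently.

    Halves are treated as unsigned; each product fits in 24 bits.
    """
    acc_hi, acc_lo = (acc >> 12) & 0o7777, acc & 0o7777
    op_hi, op_lo = (operand >> 12) & 0o7777, operand & 0o7777
    return acc_hi * op_hi, acc_lo * op_lo


print(twin_multiply((3 << 12) | 5, (7 << 12) | 11))  # (21, 55)
```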

Floating-point hardware was optional. The computer had instructions to perform 48-bit addition and subtraction.
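Adding 48-bit numbers held in pairs of 24-bit words comes down to propagating a carry from the low word into the high word. The sketch below assumes two's complement integers and assumes that the low word contributes all 24 of its bits. Subtraction is done as addition of the complement plus one. The helper names are hypothetical.

```python
WORD_MASK = (1 << 24) - 1


def add48(a_hi: int, a_lo: int, b_hi: int, b_lo: int) -> tuple[int, int]:
    """Add two 48-bit two's complement numbers stored as (high, low) word pairs."""
    low = a_lo + b_lo
    carry = low >> 24  # carry out of the low word
    high = (a_hi + b_hi + carry) & WORD_MASK
    return high, low & WORD_MASK


def sub48(a_hi: int, a_lo: int, b_hi: int, b_lo: int) -> tuple[int, int]:
    """Compute a - b as a + ~b + 1, word by word."""
    low = a_lo + (~b_lo & WORD_MASK) + 1
    carry = low >> 24
    high = (a_hi + (~b_hi & WORD_MASK) + carry) & WORD_MASK
    return high, low & WORD_MASK


print(add48(0, WORD_MASK, 0, 1))  # (1, 0): the carry crosses into the high word
print(sub48(0, 0, 0, 1) == (WORD_MASK, WORD_MASK))  # True: 0 - 1 is all ones
```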

On this machine, single precision floating-point numbers used all the bits of a 48-bit number, and had the same format as double precision floating-point numbers on the 920 and compatible machines; double-precision numbers occupied three words, thus being 72 bits long.

Another interesting thing about this computer is that it had an Interpret (INT) instruction in addition to an Execute (EXU) instruction. Rather than executing the instruction located at its effective address, the Interpret instruction loaded index register 2 (of the computer's three index registers, numbered 1 to 3) with the contents of that instruction's opcode field.

In addition, it skipped the next instruction if the target instruction had a 1 in the first bit of its two-bit index register field.
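A sketch of what INT did, as described above, follows. The bit positions are hypothetical, counted from the low-order end of a 24-bit word. The "first" bit of the index field is taken to be its high-order bit. The function and register names are illustrative.

```python
# Hypothetical bit positions, counted from the low-order end of a 24-bit word;
# the real SDS 9300 layout may differ.
OPCODE_SHIFT, OPCODE_MASK = 18, 0o77
INDEX_SHIFT, INDEX_MASK = 16, 0b11


def interpret(memory: dict[int, int], effective_address: int,
              registers: dict[str, int], next_pc: int) -> int:
    """Model of INT; returns the address of the next instruction to execute.

    next_pc is the address of the instruction following the INT itself.
    """
    target = memory[effective_address]
    # Load index register 2 with the target's opcode field; nothing is executed.
    registers["X2"] = (target >> OPCODE_SHIFT) & OPCODE_MASK
    index_field = (target >> INDEX_SHIFT) & INDEX_MASK
    # Skip one instruction if the first (high-order) bit of the index field is 1.
    return next_pc + 1 if index_field & 0b10 else next_pc
```

A routine emulating programmed operations could then, presumably, use the value left in X2 to index a table of handler routines.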

The SDS 900 series computers only had one index register, so the field in an instruction that indicated indexing was only one bit long. In addition, instructions contained a bit to indicate that an instruction was a "Programmed Operation" (POP) which meant that it would normally invoke a subroutine which would decode the programmed operation according to the computer's instruction format, and then perform some function requiring multiple instructions.

The Interpret instruction of the SDS 9300, at the cost of introducing significant overhead for every instruction, allowed programs written with programmed operations to run on the 9300. This facility was, in fact, used for one important purpose: the logic manual for the 9300's FORTRAN compiler notes that this compiler made extensive use of programmed operations - despite the 9300, unlike the computers of the 900 series, not actually having that feature.

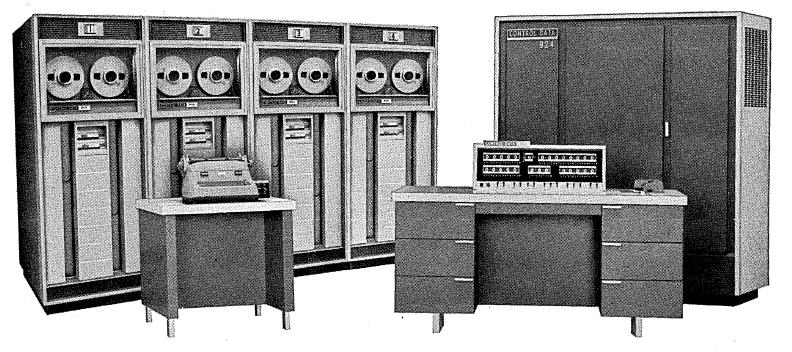

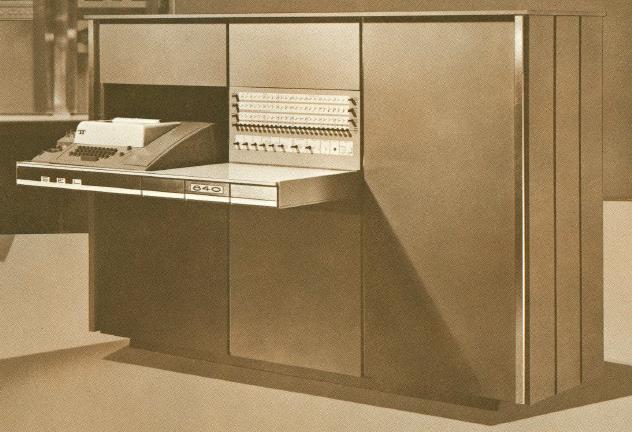

Introduced about the same time as the SDS 910 and 920, but perhaps slightly later, was the Control Data 924. When it was introduced, Control Data was already offering its 48-bit 1604 computer and its 12-bit 160 computer. A picture is below:

Its instruction format was as follows:

Note that while it gained more index registers by using a three-bit index field, it did not dispense with indirect addressing entirely. Instead, it dispensed only with the ability to combine indirection and indexing, since the index-field value 7 was used to indicate indirect addressing.
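The trade-off can be sketched as an address calculation. In this sketch, index values 1 through 6 select an index register and 7 means indirect. The sketch also assumes that 0 means no modification, that addresses are 15 bits wide, and that indirection is a single level. The helper name is hypothetical.

```python
ADDRESS_MASK = 0o77777  # assumed 15-bit address field


def effective_address(address: int, tag: int, index_registers: dict[int, int],
                      memory: dict[int, int]) -> int:
    """Resolve a 924-style 3-bit index field (a sketch under stated assumptions).

    0    -> no modification (assumed)
    1..6 -> add the contents of that index register
    7    -> one level of indirect addressing, so no indexing is possible
    """
    if not 0 <= tag <= 7:
        raise ValueError("the index field is three bits wide")
    if tag == 0:
        return address
    if tag == 7:
        return memory[address] & ADDRESS_MASK
    return (address + index_registers[tag]) & ADDRESS_MASK
```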

Apparently, while the other 24-bit computers described here sought to approach the IBM 7090 in power, this one aspired to approach the IBM 7094 instead!

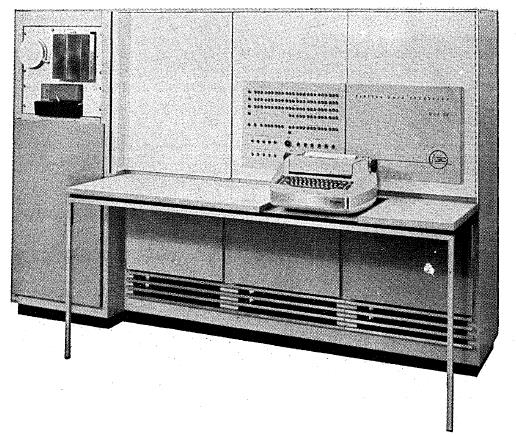

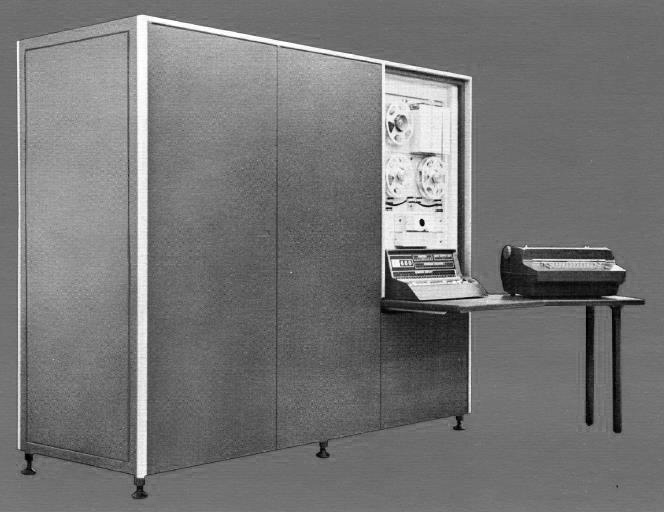

The DDP-24 computer was introduced in March, 1963 by the Computer Control Company. It is illustrated below:

The DDP-19, also made by this company, had a 19-bit word and a very similar appearance.

This computer also used the typical layout of a 24-bit instruction word that I gave as an initial example, and which, as we saw, the SDS 9300 actually used. However, expanding the number of index registers from one to three was an option, so the first bit of the index register field could go unused.

The manual also specifically noted that the same was true of the address field. But other 24-bit machines could also be ordered with memory sizes smaller than their maximum, so this was hardly a unique characteristic.

The DDP-24 showed the influence of the IBM 7090 computer in another way: instead of representing integers in two's complement format, it used sign-magnitude representation.
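The difference between the two representations is easy to see in a 24-bit word. A minimal sketch with hypothetical helper names:

```python
BITS = 24
SIGN_BIT = 1 << (BITS - 1)
MAGNITUDE_MASK = SIGN_BIT - 1
WORD_MASK = (1 << BITS) - 1


def to_sign_magnitude(n: int) -> int:
    """Sign bit plus a 23-bit magnitude."""
    if abs(n) > MAGNITUDE_MASK:
        raise OverflowError(f"{n} does not fit in {BITS}-bit sign-magnitude")
    return (SIGN_BIT | -n) if n < 0 else n


def from_sign_magnitude(word: int) -> int:
    magnitude = word & MAGNITUDE_MASK
    return -magnitude if word & SIGN_BIT else magnitude


def to_twos_complement(n: int) -> int:
    if not -SIGN_BIT <= n <= MAGNITUDE_MASK:
        raise OverflowError(f"{n} does not fit in {BITS}-bit two's complement")
    return n & WORD_MASK


print(oct(to_sign_magnitude(-5)))       # 0o40000005
print(oct(to_twos_complement(-5)))      # 0o77777773
print(from_sign_magnitude(0o40000000))  # 0: sign-magnitude also has a "negative zero"
```

In sign-magnitude, negation only flips one bit, but there are two zeros. Two's complement has a single zero and an extra negative value, and it lets addition ignore signs entirely.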

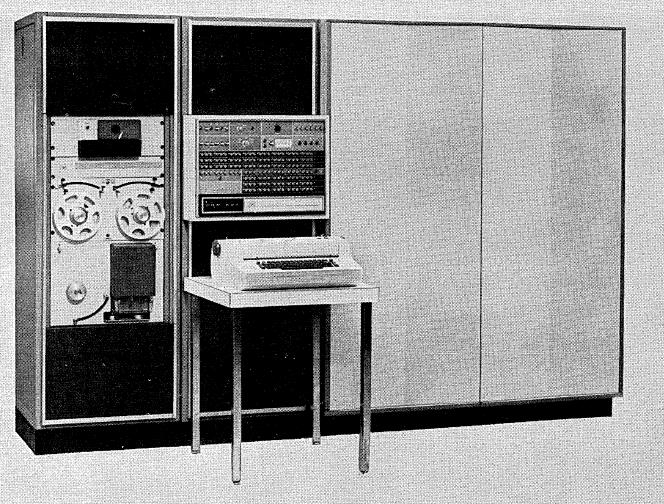

The later DDP-224 had a more striking front panel:

And then the DDP-124, pictured at right, used a style of front panel that would also be used on the Honeywell DDP-516, using four square buttons to choose the register to be displayed, and a row of round illuminated buttons to serve both as front panel lights and front panel switches at the same time, thus making the front panel as compact as possible.

Just as the SDS 910, 920, and 930 were compatible computers of increasing performance, General Electric made the 20-bit GE-215, 225, and 235, which were likewise compatible but of increasing performance. In late 1963, GE announced a new series of more powerful 24-bit computers: the GE-425, 435, 455, and 465. Each successive member of that series was billed as 80% more powerful than the one preceding it. The series thus offered a wide range of performance before the IBM System/360, announced in April 1964, would do so.
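Taken literally, and assuming the 80% figure compounds from one model to the next, the claim puts the GE-465 at about 1.8³ ≈ 5.8 times the power of the GE-425. A quick check:

```python
STEP = 1.8  # "80% more powerful" than the model before it
MODELS = ["GE-425", "GE-435", "GE-455", "GE-465"]


def relative_power(step: int) -> float:
    """Power relative to the GE-425, assuming the 80% figure compounds."""
    return STEP ** step


for step, model in enumerate(MODELS):
    print(f"{model}: {relative_power(step):.2f} x GE-425")
# GE-425: 1.00, GE-435: 1.80, GE-455: 3.24, GE-465: 5.83
```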

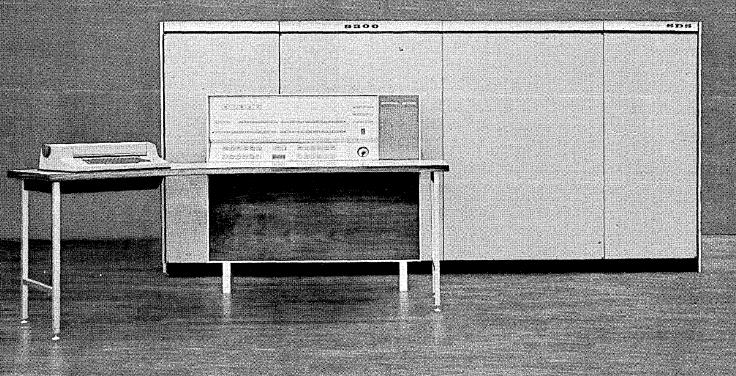

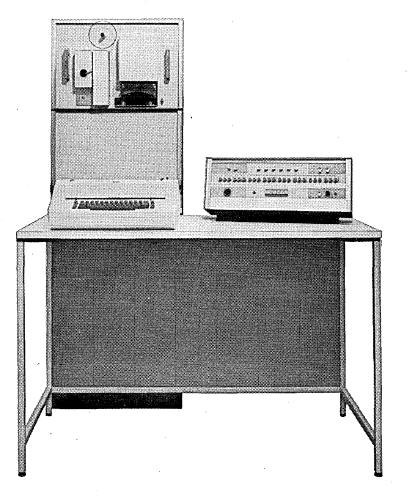

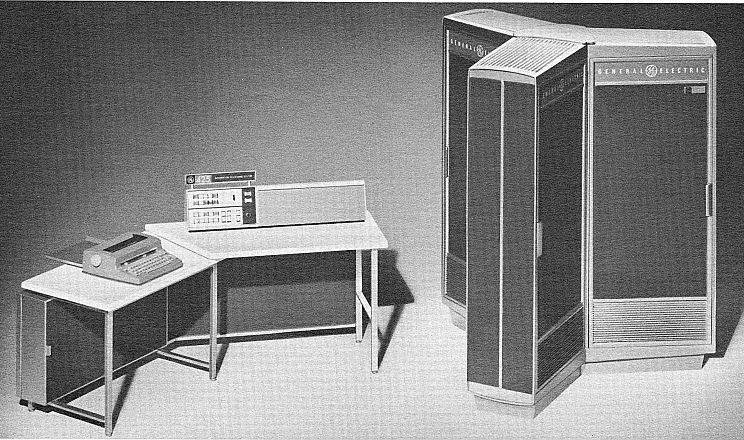

The GE-425 is pictured below, with its control panel on the left, and the CPU on the right:

And this image, a portion of an advertisement for the entire series,

shows the CPUs of the 425, 435, 455, and 465 respectively from left to right.

These computers had a three-bit index register field, but only six index registers. As with the Control Data 924, the value 7 performed a special function. Rather than simply indicating indirect addressing, however, as on the 924, it caused the instruction to be expanded to 48 bits in length. The second word of the instruction included a pointer to a memory location to be used instead of an index register. Multiple addressing modes were available for that pointer, and indirect addressing was one of the possible results of using an instruction in this format.
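One consequence is that the index field of each instruction's first word tells the processor whether to fetch a second word. A program must therefore be scanned from the start to find instruction boundaries. The sketch below splits a stream of 24-bit words into one- and two-word instructions. The position of the index field is a hypothetical choice, as is the function name.

```python
INDEX_SHIFT = 15     # hypothetical position of the 3-bit index field
EXTENDED_FORMAT = 7  # index value that makes the instruction two words long


def split_instructions(words: list[int]) -> list[tuple[int, ...]]:
    """Group a stream of 24-bit words into one- and two-word instructions."""
    instructions = []
    i = 0
    while i < len(words):
        tag = (words[i] >> INDEX_SHIFT) & 0b111
        length = 2 if tag == EXTENDED_FORMAT else 1
        if i + length > len(words):
            raise ValueError("program ends in the middle of a two-word instruction")
        # The second word is consumed whole; its bits are never read as an index field.
        instructions.append(tuple(words[i:i + length]))
        i += length
    return instructions
```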

The 24-bit computers discussed so far were very conventional examples of their kind. The next one, however, was very special.

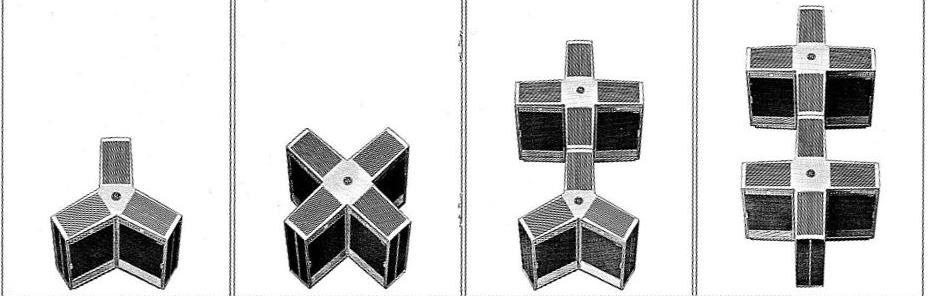

The Packard-Bell 440 computer, shown at right, was first installed in 1964. It used BIAX (for bi-axial) memory, a modified form of magnetic core with non-destructive readout made by the Aeronutronic division of Ford. Rather than looking like doughnuts with a square profile, these magnetic cores were rectangular prisms with holes in them.

Its main memory had an access time of 2 microseconds and a cycle time of 5 microseconds; the fast memory had an access time of less than a microsecond.

The machine could have from one to seven modules containing 4,096 words of regular core memory, with the last 4K of address space being reserved to contain from one to eight modules containing 256 words of fast memory.
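A sketch of that address map follows. It assumes 15-bit addressing: eight 4K blocks, consistent with seven core modules plus the reserved last 4K. It also assumes the fast-memory modules are packed from the start of that last block. Both points, and the helper name `locate`, are assumptions. Note that even eight fast modules fill only 2,048 of the 4,096 reserved addresses.

```python
from typing import Optional

CORE_MODULE_WORDS = 4096
FAST_MODULE_WORDS = 256
ADDRESS_SPACE = 8 * CORE_MODULE_WORDS                  # 32K words, 15-bit addresses
FAST_REGION_START = ADDRESS_SPACE - CORE_MODULE_WORDS  # the reserved last 4K


def locate(address: int, core_modules: int,
           fast_modules: int) -> Optional[tuple[str, int, int]]:
    """Map an address to (kind, module, offset), or None if nothing is installed there."""
    if not 0 <= address < ADDRESS_SPACE:
        raise ValueError("address outside the 32K address space")
    if not (1 <= core_modules <= 7 and 1 <= fast_modules <= 8):
        raise ValueError("configurations have 1-7 core modules and 1-8 fast modules")
    if address < FAST_REGION_START:
        module, offset = divmod(address, CORE_MODULE_WORDS)
        return ("core", module, offset) if module < core_modules else None
    module, offset = divmod(address - FAST_REGION_START, FAST_MODULE_WORDS)
    return ("fast", module, offset) if module < fast_modules else None
```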

The fast memory was primarily intended for microprograms, but microprograms could be placed in regular memory as well if necessary.

Of course, the System/360, announced in 1964 but first installed in 1965, made extensive use of microprogramming in its design. But the Packard-Bell 440 was intended to be user-microprogrammable, which was unique at the time and remained rare afterward.

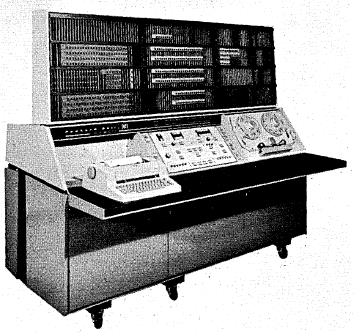

IBM did allow some academic researchers to microprogram some models of the IBM 360 series, under non-disclosure agreements. As well, the book Microprogramming: Principles and Practices by Samir S. Husson gave basic descriptions of the microprogramming architecture of several IBM 360 models, as well as the RCA Spectra 70/45 and the Honeywell H4200.

Later, some 16-bit minis also offered user-microprogrammability.

The documentation for the GRI-909 computer didn't mention microprogramming, but the instruction set for that computer was not at all like that of a typical computer, and instead was much more like a repertoire of microinstructions.

The Nanodata QM was a user-microprogrammable machine available during the 1970s.

But perhaps the most memorable of the user-microprogrammable computers available in that era were those made by the Microdata Corporation.

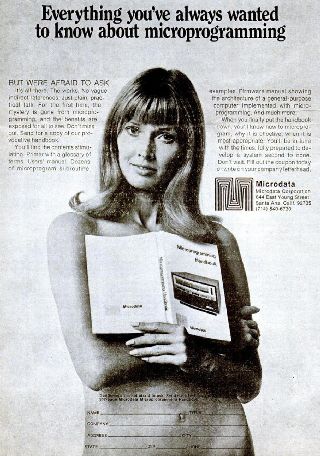

Microdata's advertisements invited readers to send in for a free copy of its Microprogramming Handbook, which described how to use the various computers in its product line. These advertisements called attention to the status of microprogramming up until that time as largely a secret "black art", as it tended to be viewed for the reasons noted above. They also tellingly indicated that computing, despite Ada Augusta, Countess of Lovelace, Admiral Grace Hopper (U.S.N.), and even Margaret Hamilton at NASA, was still very much a male-dominated field.

Yes, it is now actually on-topic here for me to spice up this page with two of the most infamous advertisements in the history of computing!

The photo shown at right, and the one below, both from brochures, illustrate the ASI Advance 6020 computer by Advanced Scientific Instruments, a subsidiary of Electro-Mechanical Research. Note that the machine is illustrated with a very different style of front panel in the two images. There was also a larger, compatible ASI 6030; both were introduced in late 1964, and some larger models, the 6040, 6050, and 6070, came a few months later in 1965.

This computer, like the Datacraft 6024 described below, had the opcode followed by indirect and index bits, and used sign-magnitude representation of integers. However, they were different computers with distinct instruction sets.

This photo from a brochure illustrates System Engineering Laboratories' SEL 840 computer. It was first installed in 1965, and the earliest advertisements for it appear to be from spring of that year.

Like the SDS 9300 and the DDP-24, this computer used the basic instruction-word layout that I initially gave as a typical example.

Also like the SDS 9300, its single-precision floating-point numbers were 48 bits long and its double-precision floating-point numbers were 72 bits long. However, in both cases, the sign bit of every word in the number except the first was left unused to simplify floating-point arithmetic. This was despite the fact that the computer used two's complement arithmetic rather than one's complement or sign-magnitude; those latter two kinds of integer arithmetic introduce inconsistencies that make unused sign bits very helpful for software implementations of floating point.
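One way to see the convenience: if each word after the first contributes only its low 23 bits, a multi-word magnitude becomes a number in base 2^23. When two such pieces are added, the carry lands in the otherwise unused sign bit, where an ordinary test for a negative result can detect it. This is a minimal sketch with hypothetical helper names. It leaves out the exponent and the handling of the first word's sign.

```python
WORD_BITS = 24
DIGIT_BITS = WORD_BITS - 1          # each word contributes only its low 23 bits
DIGIT_MASK = (1 << DIGIT_BITS) - 1


def to_words(magnitude: int, count: int) -> list[int]:
    """Split a non-negative magnitude into 23-bit pieces, most significant first."""
    words = []
    for _ in range(count):
        words.append(magnitude & DIGIT_MASK)
        magnitude >>= DIGIT_BITS
    if magnitude:
        raise OverflowError("magnitude too large for this many words")
    return words[::-1]


def from_words(words: list[int]) -> int:
    value = 0
    for w in words:
        value = (value << DIGIT_BITS) | (w & DIGIT_MASK)
    return value


def add_words(a: list[int], b: list[int]) -> tuple[int, list[int]]:
    """Add two magnitudes word by word; each carry appears in a word's sign bit."""
    result = [0] * len(a)
    carry = 0
    for i in reversed(range(len(a))):
        total = a[i] + b[i] + carry
        carry = total >> DIGIT_BITS  # bit 23, the sign bit of the 24-bit sum
        result[i] = total & DIGIT_MASK
    return carry, result
```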

Also first announced in 1964, the Honeywell 300 computer was advertised as a low-cost computer for scientific workloads.

The index field in its instructions, like that of the Control Data 924, indicated indirect addressing with the value 7. One important new feature of this computer that was noted in its advertising was that it used ferrite cores including lithium in their composition; this meant that it was no longer necessary to either refrigerate or heat the cores in order to keep their electrical characteristics constant.

The Scientific Control Corporation SCC 660 computer was also first installed in 1965. It is illustrated below:

The instruction format of this computer was:

This machine, which had only one index register and used the bit so saved to indicate programmed operations, is reminiscent of the SDS 900 series machines. It improved on them, however, by not leaving one bit of the instruction unused, as the SDS machines did to allow a more convenient and compact object file format.

Datacraft made the DC 6024 computer, and developed improved models of it over time.

The original form of the first Datacraft 6024 computer is shown below:

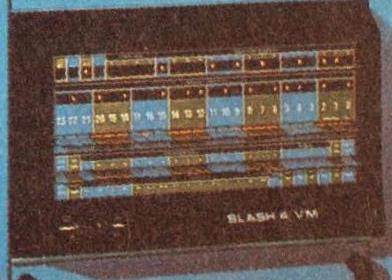

Shortly after it developed the DC 6024/4, the company was purchased by Harris, and the computer was renamed the Harris SLASH 4. The front panel of that computer is shown at right.

Its instruction format was equivalent to the typical one I gave as an example above, but the fields were re-ordered:

Originally, single-precision and double-precision floats were both 48 bits long, with the other bits in the word containing the exponent unused for single-precision floats, as was the case for many 24-bit machines.

However, the Harris 800, a later machine in this line, added a 96-bit floating-point format.

A 24-bit computer first introduced in 1970 happens to be particularly interesting and noteworthy from my point of view for a number of reasons.

The computer is the System IV/70 from Four-Phase Systems.

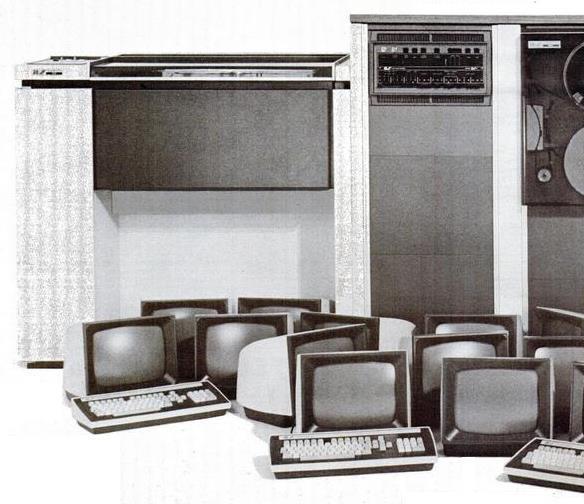

Here is part of an image from an advertisement of a system using that computer,

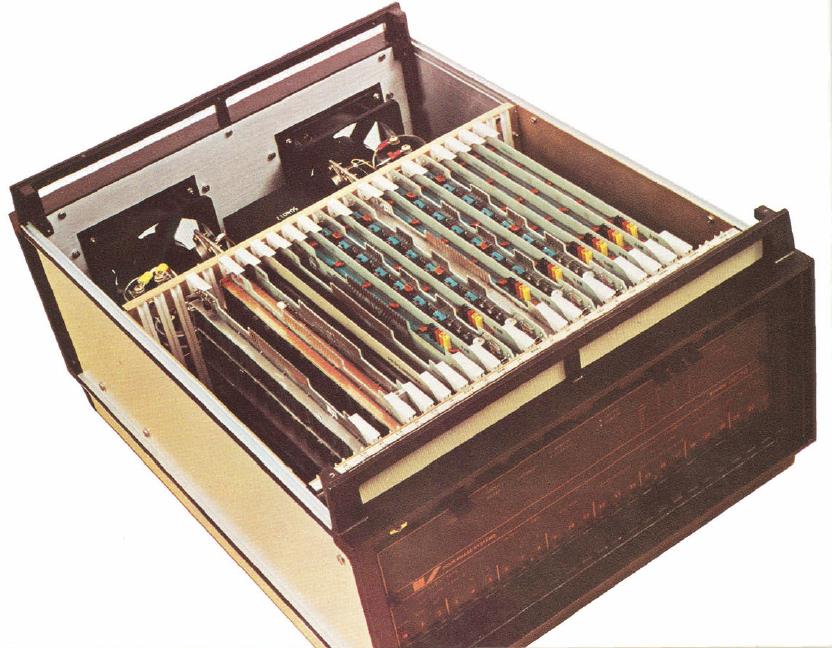

and here is a close-up look at the CPU from a brochure:

This computer is noteworthy for making use of very advanced large-scale integration for its time. It was built around a bit-slice chip, the AL1, which Four-Phase Systems designed in-house for its own use.

This chip was made possible by the technique that gave the company its name: four-phase logic. Four-phase logic was a design approach that simplified the design of chips using dynamic logic, which can, for example, use the capacitance of the interconnects between gates on the chip as a buffer stage.

Using dynamic logic rather than a naïve conventional approach based on static logic had benefits that were, at one point, stated as increasing chip speed and density by a factor of ten, and reducing chip power drain by a factor of ten as well.

This technique was invented at Autonetics.

And this computer happened to salute another innovation from Autonetics as well, even if a more dubious one! The goal was to keep the circuitry beyond the three bit-slice chips to an absolute minimum while still including hardware floating point (in the sense, I presume, of microcode for floating-point instructions). To that end, both the 48-bit single-precision and the 72-bit double-precision floating-point formats used a whole 24-bit word for the binary exponent (including its sign). This meant that floating-point numbers on this system had a range from 10^-2,525,223 to 10^+2,525,223. That is not quite as large as the range of floating-point numbers on Autonetics' RECOMP II, which reserved a whole 40-bit word for the exponent, again to simplify its implementation, but the same technique gave the same sort of result.
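The decimal range follows from simple arithmetic. An n-bit signed exponent field reaches about 2^(n-1) in magnitude, so the largest value is about 2^(2^(n-1)), which is 10^(2^(n-1) · log10 2). The sketch below ignores the factor of at most 2 contributed by the fraction, and the function name is hypothetical.

```python
import math


def decimal_exponent_limit(exponent_bits: int) -> float:
    """Approximate largest decimal exponent for a signed binary exponent field."""
    return 2 ** (exponent_bits - 1) * math.log10(2)


print(round(decimal_exponent_limit(24)))    # 2525223, the System IV/70 figure
print(f"{decimal_exponent_limit(40):.3e}")  # RECOMP II, 40-bit exponent word
```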

At least they never ran any advertisements claiming this design compromise to be a major feature.