Just as transistors replaced vacuum tubes, integrated circuits then replaced discrete transistors.

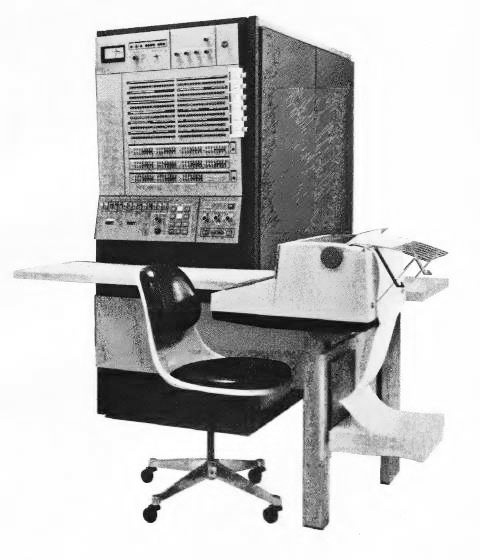

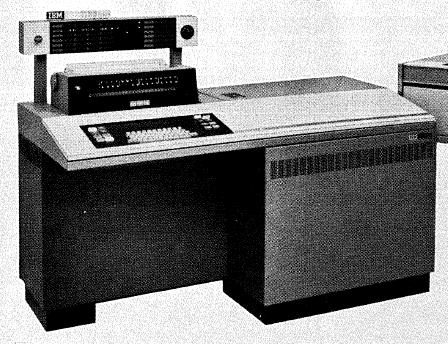

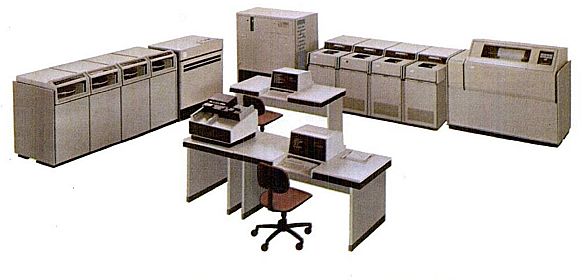

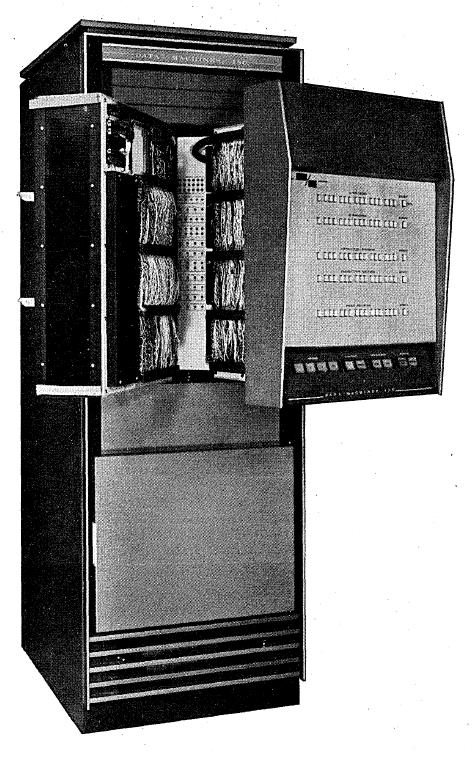

Another major computer milestone took place on April 7, 1964, when IBM announced their System/360 line of computers. An image of a System/360 Model 65, from an advertising brochure for a third-party communications processor for System/360 computers, is shown at left. The 360/65 was microprogrammed, and had two internal ALUs; one was 60 bits wide, and used both for integer arithmetic on values up to 32 bits long, and for the arithmetic on the mantissa portions of floating-point numbers, and the other was 8 bits wide, and used both for binary arithmetic on the exponents of floating-point numbers, and for performing packed decimal arithmetic two digits at a time. On the right, an image of the System/360 Model 75 is shown; this was the top-of-the-line system in their initial lineup.

These computers used microprogramming to allow the same instruction set, and thus the same software, to be used across a series of machines with a broad range of capabilities. The Model 75 performed 32-bit integer arithmetic and floating-point arithmetic, both single and double precision, directly in hardware; the Model 30 had an internal arithmetic-logic unit that was only 8 bits wide.

The initial announcement of the IBM System/360 referred to the Models 30, 40, 50, 60, 62, and 70. Some time passed between the announcement and the date when the first System/360 computers were ready to be shipped, and in that interval core memory technology advanced. As a result, the Model 60 and Model 62, two versions of the same computer with different speeds of core memory, were replaced by the Model 65, with still faster core memory: the Model 65 had a memory cycle time of 0.75 microseconds, whereas the Model 60 was to have had memory with a cycle time of 2 microseconds, and the Model 62 memory with a cycle time of 1 microsecond. Likewise, the Model 70 was replaced by the Model 75, again with faster core memory (again 0.75 microseconds, as against 1 microsecond for the Model 70).

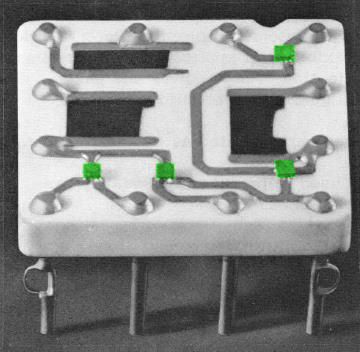

The IBM System/360 was billed as using integrated circuits rather than discrete transistors, and this was generally accepted at the time. However, instead of using monolithic integrated circuits, its integrated circuits were of a type that IBM termed Solid Logic Technology. An IBM image of such a circuit is shown at right. Easily visible in the image are three resistors, the black areas between the metal traces on the alumina substrate. The small square transistors, to each of which three traces go, are not as easily visible, so I have retouched the black-and-white image with a splash of color, so that each of the four transistors present is highlighted by being green in color.

Later, a System/360 Model 67 was introduced. This, however, was not a Model 65 with faster memory; instead, it was a Model 65 with the addition of Dynamic Address Translation, which allowed the computer to use a swap file and run time-sharing operating systems. These included not only ones from IBM, but also third-party ones such as the Michigan Terminal System (MTS), developed at the University of Michigan in cooperation with the other universities that used it, such as Wayne State University, the Rensselaer Polytechnic Institute, the University of British Columbia, Simon Fraser University, the University of Alberta (Edmonton), the University of Durham, and the University of Newcastle upon Tyne, which shared its computer with both the University of Durham and Newcastle Polytechnic. (At the time, the University of Calgary was known as the University of Alberta (Calgary); as its computer was a Control Data 6600, MTS was not an option for them.) Michigan State University also ran MTS, but without actively participating in its development, and it was also used by Hewlett-Packard and by the Goddard Space Flight Center at NASA.

By this time, IBM was already the dominant computer company in the world. The IBM 704, and its transistorized successors such as the IBM 7090, helped to give it that status, and the IBM 1401, a smaller transistorized computer intended for commercial accounting work, was extremely successful in the marketplace.

The System/360 was named after the 360 degrees in a circle; the floating-point instructions, and commercial instructions to handle packed decimal quantities, were both optional features, while the basic instruction set worked with binary integers; so the machine was, and was specifically advertised as, suitable for installations with either scientific or commercial workloads. And because the related features were options, it was not necessary for one kind of customer to pay extra to be able to handle the other kind of work.

However, the fact that IBM's computers were more expensive than those of its competitors somewhat limited the benefit of being able to use the same computer for these two divergent workloads. Grosch's Law - the fact that a bigger computer is a better bargain - did mean that one computer able to do twice as much work was cheaper than two; but the real benefits of combining scientific and commercial workloads on one machine were in other areas: flexibility of scheduling, ease of training, the need for only one set of staff to operate the computer (mounting tapes, handling printouts, and so on).

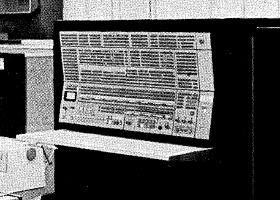

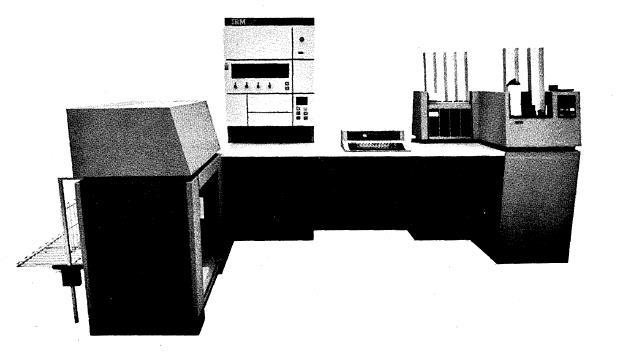

IBM succeeded the System/360 series with System/370, announced on June 30, 1970. Several models of that series were improved versions of corresponding models of System/360. All the System/370 models used monolithic integrated circuits for their logic, rather than SLT (Solid Logic Technology), used by most System/360 models; some also used semiconductor main memory instead of core memory. While System/370 mainframes largely resembled System/360 mainframes, they had a different color scheme, being black instead of light beige. A System/370 Model 155 console is pictured at right, and a model 165 is pictured below. Both of these systems were announced on June 30, 1970.

The System/370 Model 165 closely resembled its predecessor, the System/360 Model 85, both in physical appearance and in internal logic design; even its microprogram words were the same length. The later Model 168 was a modified version of the Model 165 which offered the feature of virtual memory. It was announced on August 2, 1972, along with the Model 158, a version of the 155 also modified to offer virtual memory. This allowed programs to be written to make use of more memory than was actually available, with the computer automatically making use of a swap file on disk in a fashion transparent to the program. Of course, today, a swap file is a routine thing that is taken for granted, and the concept was first introduced in 1962, on the Manchester ATLAS computer, under the name "single-level store".

Despite this, it remained a significant step for IBM to bring this feature into the mainstream of computing... although an article about IBM's introduction of virtual memory, which appeared in the February, 1973 issue of Datamation, was titled Today IBM Announces Yesterday.

IBM itself, however, had previously offered paged memory as well, with the IBM System/360 Model 67, announced on August 16, 1965 and first shipped in May, 1966.

Among the many innovations from IBM that advanced the state of computing, one can note their invention of the vacuum column which significantly improved the performance of magnetic tape drives for computers; their 1403 line printer was legendary for its print quality and reliability, and they invented the hard disk for the RAMAC vacuum-tube computer from 1956. Much later, they also invented the original 8-inch floppy disk as a means of storing microcode for several models of the IBM System/370 series, such as the model 145. Also, the Tomasulo algorithm, first implemented in the IBM System/360 Model 91, was another IBM invention which made modern computers with out-of-order execution possible. (The Control Data 6600 had a partial implementation of out-of-order execution which was able to deal with some kinds of pipeline hazards, but not pipeline hazards of all the fundamental types.)

As a consequence of IBM's major presence in the computer industry, their computers were very influential. Before the IBM System/360, nearly all computers that worked with binary numbers (many instead worked with decimal numbers only) had a word length (the size of numbers they worked with) that was a multiple of six bits. This was because a six bit character could encode the 26 letters of the alphabet, 10 digits, and an adequate number of punctuation marks and special symbols.

The IBM System/360 used an eight-bit byte as its fundamental unit of storage. This let it store decimal numbers in packed decimal format, four bits per digit, instead of storing them as six bit printable characters (like the IBM 705 and the IBM 1401 computers, for example).
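To make the saving concrete, here is a minimal sketch in Python of packing decimal digits two to a byte. The function names `pack_decimal` and `unpack_decimal` are my own, chosen for illustration, and the sketch is simplified: it leaves out the sign code that the real System/360 packed decimal format keeps in the last four bits of a number.

```python
def pack_decimal(digits: str) -> bytes:
    """Pack a string of decimal digits two to a byte, four bits per digit.

    Simplified illustration: unsigned only, no sign code.
    """
    if not (digits.isascii() and digits.isdigit()):
        raise ValueError("expected decimal digits only")
    if len(digits) % 2:
        digits = "0" + digits  # pad on the left to fill a whole byte
    return bytes(
        (int(digits[i]) << 4) | int(digits[i + 1])
        for i in range(0, len(digits), 2)
    )


def unpack_decimal(packed: bytes) -> str:
    """Recover the digit string: high four bits first, then low four bits."""
    return "".join(f"{b >> 4}{b & 0x0F}" for b in packed)


if __name__ == "__main__":
    packed = pack_decimal("1964")
    print(packed.hex(), unpack_decimal(packed))
```

Four digits take two bytes, or 16 bits, this way; stored as six-bit characters, the same four digits would take 24 bits.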

To clarify: some decimal-only computers like the IBM 1620, the IBM 7070, and the NORC, also by IBM, and the LARC from Univac, had already been using packed decimal; and the Datamatic 1000 by Honeywell, a vacuum-tube computer with a 48-bit word, used both binary and packed decimal long before the System/360 came along. And the IBM System/360 byte, capable of containing either one eight-bit character or two packed decimal digits, was even more closely anticipated by the slab of the NCR 315 computer, which was 12 bits in size, and which contained either two six-bit characters or three four-bit packed decimal digits.

So I'm not saying that IBM invented the idea of using no more bits than necessary for storing decimal numbers in a computer; that was obvious all along. Rather, what I'm trying to say is that IBM's desire to use this existing technique led them to choose a larger size for storing printable characters in the computer. This larger size made it possible to use upper and lower case with the computer, although lower case was still initially regarded as a luxury, and it was not supported by most peripheral equipment for the IBM System/360 which handled text.

Generally speaking, computers prior to the System/360 referred to the six-bit area of storage which could contain a single printable character as a character. The System/360 documentation referred to the eight-bit area of storage that could contain a character on that machine as a byte rather than a character. Why?

One obvious reason is to avoid confusion with other computers where the term character refers to a different amount of space.

As well, the 8-bit byte was chosen for the System/360 because in addition to containing a character, it could also contain two decimal digits in binary-coded decimal (BCD) form. Thus, a byte was an area of storage intended to hold other things besides characters.

This kind of flexibility is not precluded by the use of six-bit characters; in 1962, as noted above, the NCR 315 computer had as its basic unit of storage the slab which could contain either two six-bit characters, or three BCD digits.
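The arithmetic behind the slab is simple enough to sketch in Python: twelve bits divide evenly into either two six-bit fields or three four-bit fields. The function names below are hypothetical, and the order in which I place the fields within the slab is an assumption made for illustration, not a description of the actual NCR 315 layout.

```python
SLAB_BITS = 12  # size of the basic unit of storage described above


def slab_from_chars(first: int, second: int) -> int:
    """Hold two six-bit character codes in one 12-bit slab (assumed order)."""
    for code in (first, second):
        if not 0 <= code < 64:
            raise ValueError("a six-bit character code must be 0..63")
    return (first << 6) | second


def slab_from_digits(hundreds: int, tens: int, units: int) -> int:
    """Hold three four-bit BCD digits in one 12-bit slab (assumed order)."""
    for digit in (hundreds, tens, units):
        if not 0 <= digit <= 9:
            raise ValueError("a BCD digit must be 0..9")
    return (hundreds << 8) | (tens << 4) | units


if __name__ == "__main__":
    # Either way, the result fits in the same 12 bits.
    print(oct(slab_from_chars(0o12, 0o34)), hex(slab_from_digits(3, 1, 5)))
```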

The word byte was not coined for the IBM System/360, however. Instead, it is generally believed to have originated at IBM for use with the IBM 7030 computer (also known as the STRETCH). That computer had a 64-bit word, but it had bit addressing, and so the size of a byte was variable rather than fixed.

The term "byte" was also used in documentation for the PDP-6 (which had the PDP-10 as a compatible successor); on that machine, byte manipulation instructions could operate on any number of bits within a single 36-bit word, but they could not cross word boundaries, unlike the case of the STRETCH.
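As a rough sketch of what such a byte manipulation instruction does, the Python function below pulls a field of any width out of a 36-bit word, and refuses a field that would run past the end of the word. The name `load_byte` and its parameters are mine, loosely modeled on the idea of a byte described by a position and a size; this is an illustration of the concept, not a description of the actual instruction encoding.

```python
WORD_BITS = 36


def load_byte(word: int, position: int, size: int) -> int:
    """Extract a byte of `size` bits from a 36-bit word.

    `position` is the number of bits lying to the right of the byte.
    The byte must fit entirely within the one word.
    """
    if not 0 <= word < (1 << WORD_BITS):
        raise ValueError("word must fit in 36 bits")
    if size < 0 or position < 0 or position + size > WORD_BITS:
        raise ValueError("byte may not cross a word boundary")
    return (word >> position) & ((1 << size) - 1)


if __name__ == "__main__":
    word = 0o123456701234
    print(oct(load_byte(word, 30, 6)))   # leftmost six bits
    print(oct(load_byte(word, 18, 18)))  # left half of the word
```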

As well, in his set of books The Art of Computer Programming, Donald E. Knuth devised a simple hypothetical computer architecture to allow algorithms to be presented in assembler language. To avoid making his examples specific to either binary or decimal computers, he defined the memory cells of MIX, called bytes, as having the capacity to hold anywhere from 64 to 100 different values. So four trits in a ternary computer, with 81 values, would also qualify (and I remember reading of a professor who implemented MIX with that byte size in a simulator program, so as to validate programs in the MIX language submitted by students).

So one competing definition of a byte - but specific to the MIX architecture - existed that specifically excluded eight-bit bytes; and I remember that this led to someone writing a letter to BYTE magazine saying they were mistaken in using that term in connection with all those 8-bit chips out there!
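The arithmetic is easy to check. The short Python sketch below tests whether a byte made of a given number of digits in a given radix falls within the 64-to-100 range of the MIX definition; the function name `is_valid_mix_byte` is hypothetical, introduced only for this illustration.

```python
def is_valid_mix_byte(radix: int, digits: int) -> bool:
    """True if `digits` digits in base `radix` can hold 64 to 100 values."""
    return 64 <= radix ** digits <= 100


if __name__ == "__main__":
    for name, radix, digits in [
        ("six bits", 2, 6),
        ("two decimal digits", 10, 2),
        ("four trits", 3, 4),
        ("eight bits", 2, 8),
    ]:
        print(name, radix ** digits, is_valid_mix_byte(radix, digits))
```

Six bits, two decimal digits, and four trits all qualify; eight bits, with 256 values, do not.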

Despite the PDP-6, though, it definitely was the IBM System/360 that put the word "byte" into the language, making it familiar to everyone who worked with computers. And it is almost universally thought of as referring to eight bits of memory. Despite this, to completely eliminate any possibility of ambiguity, many international communications standards use the term "octet" to refer to exactly eight bits of storage. This may also have to do with the standards bodies being European, and IBM being an American company, of course.

One could have claimed that the PDP-10 (and, of course, its predecessor, the PDP-6, and its successor, the DECsystem-20) was the only machine to use "real" bytes, because it was the only machine to follow, even if imperfectly, the model of the STRETCH, for which the term was coined!

On the other hand, one of the most obvious examples of IBM's influence in popularizing the eight-bit byte was when the Digital Equipment Corporation brought out their PDP-11 computer, with a 16-bit word, in 1970; their previous lines of computers had word lengths of 12, 18, and 36 bits. This will be more fully covered in a later section devoted to the minicomputer.

The IBM STRETCH computer from 1961, one attempt by IBM to design a very fast and powerful computer, was a disappointment for IBM, just as the IBM 360/91 was. On the other hand, the IBM 360/85 performed better than expected, which led to IBM adding cache to the IBM 360/91 to correct its performance. The resulting improved computer was designated the IBM System/360 Model 195.

The image below shows how the IBM System/360 Model 195 was pictured in one IBM advertisement:

This implementation of the System/360, from 1969, combined cache memory, introduced on the large-scale microprogrammed 360/85 computer, with pipelining with reservation stations using the Tomasulo algorithm, introduced on the 360/91 (and used on both the 91 and the 195 only in the floating-point unit). The Tomasulo algorithm is equivalent to out-of-order execution with register renaming, which is the more common version of out-of-order execution used by other computers. This was a degree of architectural sophistication that would only be seen in the mainstream of personal computing with microprocessors when the Pentium II came out (the nearly identical Pentium Pro being somewhat outside the mainstream).

Today, because of the continued improvements in semiconductor fabrication since the Pentium Pro was made in 1995, nearly all microprocessors, even the small low-power ones used in smartphones, have both on-chip cache and out-of-order execution.

So, when IBM came out with the IBM System/360 Model 195, they could have, quite truthfully, said in their advertisements that "Someday, all computers will be made this way," even if there are indeed still a few microcontrollers and embedded processors that don't include those features.

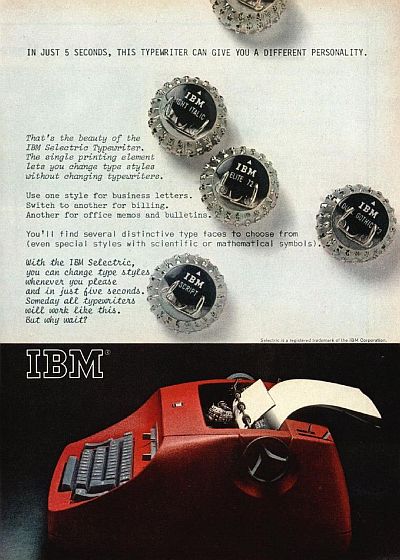

However, they didn't, as this could hardly have been anticipated at the time. Instead, as the advertisement shown at right documents, IBM did say "Someday, all typewriters will work like this," about the IBM Selectric.

That turned out to have been overoptimistic. When the patents expired, quite a few companies did make single-element typewriters similar to the IBM Selectric: Adler, Facit, Olivetti and Brother, among others. However, they continued to make ordinary typewriters as well, and other typewriter makers did not bother; instead, it was the daisywheel which ended up making any other kind of typewriter obsolete. Of course, with interchangeable daisywheels, and with the print element moving instead of the carriage, a daisywheel typewriter still had a lot in common with the IBM Selectric that preceded it.

Simple pipelining, where the fetch, decode, and execute phases of successive instructions were overlapped, had been in use for quite some time; splitting the execute phase of instructions into parts would only be useful in practice if successive instructions didn't depend on one another. The IBM 7094 II was one example of a computer from IBM that used this technique.

Incidentally, although the System/360 Model 85 computer was the first computer sold commercially with cache memory, the concepts behind cache memory were first outlined in a 1962 paper by Leon Bloom, Morris Cohen, and Sigmund Porter, all of whom worked for NCR. However, their paper envisaged a very small cache memory with only a handful of words.

And, of course, many computers, before cache memory came along, had small, fast memories that were explicitly managed by the programmer.

Although IBM designed the IBM System/360 computer to replace all the existing computers which they offered, which had many different instruction sets, not long after the introduction of the IBM System/360, in early 1965, they brought out an inexpensive computer with a 16-bit word, the IBM 1130, which had its own different instruction set.

This was a replacement for the IBM 1620, aimed at small-scale scientific computer users.

It was several years later that IBM brought out an incompatible computer aimed at commercial computer users, at least if you don't count the IBM System/360 Model 20, which, while a member of the System/360 product line, was not fully compatible with the standard System/360 instruction set. This computer was the IBM System/3, and is particularly notable for introducing the 96-column punched card to the industry.

A successor to the System/3 was the System/34, from 1975. This computer used the System/3 instruction set, implemented using microcode.

Its performance was felt to be disappointing, so a later machine, the IBM System/36, while retaining the System/3 instruction set, and continuing to implement it in microcode, added a second, faster, processor on which the operating system ran.

One good point it had, if a minor one, was that its keyboard was very nice. It resembled that of the original IBM PC, with ten keys in two columns of five at the left, and a numeric keypad on the right, but since it predated IBM's adoption of ASCII, the main typing area was that of a 44-key typewriter, like that of a Selectric or Selectric II typewriter.

Then the next system intended as a successor in this lineage, the IBM System/38, had a new instruction set derived from IBM's Future Systems project; that was continued on into the IBM AS/400 computer and IBM's present day System i line-up (which recently changed from being a line of computers to a line of software for their Power Systems computers, based on the POWER architecture).

Of course, a truly vast number of different computers were made from integrated circuits by other manufacturers. One of which I was able to find a good photograph was the Burroughs B1700, one of their small-scale systems aimed at business customers. Thus, it directly competed with the IBM System/3 and its successors shown above.

Another example of a third-generation computer that didn't come from IBM, and that isn't a minicomputer (those are covered on the next page), is the later KI10 version of the PDP-10; the original version, with the KA10 chassis, was made with discrete transistors.

The KI10, which was made with TTL logic, was succeeded by the KL10, made from ECL; unlike previous models of the PDP-10, which were hardwired, the KL10 was microcoded.

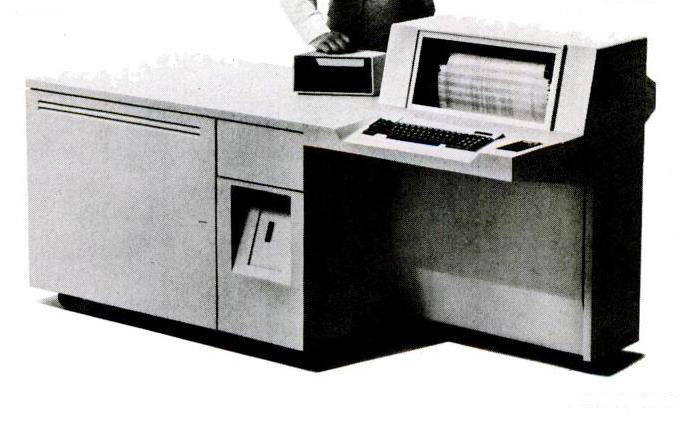

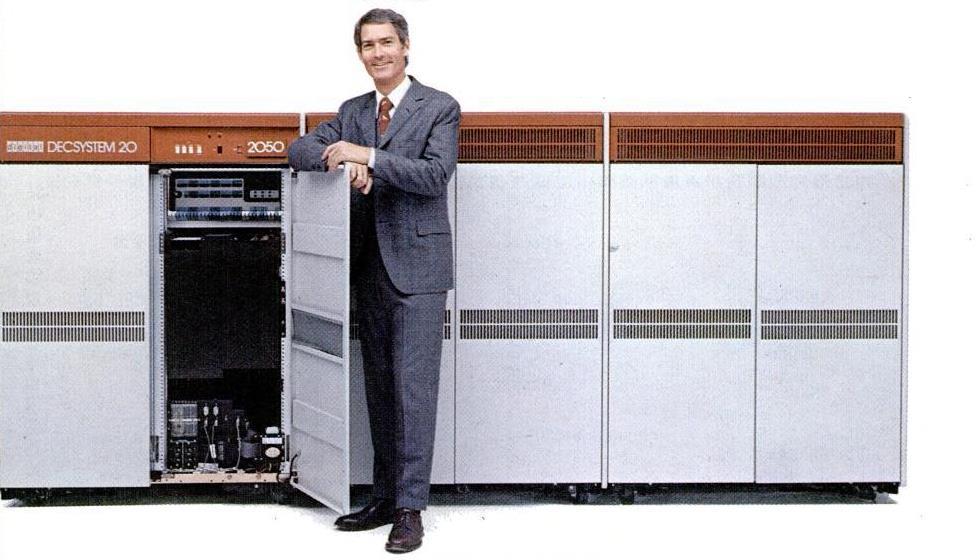

The picture below, from an advertisement, is of the DECsystem 2050, which was internally very much the same as the KL10 version of the PDP-10, but packaged in less tall racks. That it is similar to the KL10 is apparent, as its PDP-11 based peripheral processor is shown in this image.

And here's a photograph of an NCR Criterion system:

This computer used the ASCII character set, and so it stored characters in 8-bit bytes, also using packed decimal, like the NCR Century before it, to which it was a compatible successor.

It may be noted that IBM introduced its z/Architecture in the year 2000; this extension of the System/360 mainframe architecture provided 64-bit addressing. The first machine on which it was implemented was the z/900. The z/900 was announced in October, 2000, and was to be available in December, 2000.

The 64-bit Itanium from Intel only became available in June, 2001, and the first chips from AMD that implemented the x64 extension to the 80386 architecture, that Intel later adopted as EM64T, were shipped in April, 2003.

However, AMD had released the x64 spec in 1999, and this was after Intel had described the Itanium, as it was a reaction to Intel's way of moving to 64 bits.

Thus it seemed that the microprocessor had beaten the mainframe to 64-bit addressing, making it an embarrassment that IBM's big mainframes couldn't do something that was being offered in tiny desktop microcomputers; but in fact the 64-bit z/Architecture mainframe was delivered first.

However, there are other microprocessors besides those which are compatible with the Intel 80386. The Alpha microprocessor (which was only ever a 64-bit machine from the start) was introduced in 1992, and Sun adapted its SPARC architecture to 64-bit addressing in 1995, so IBM, as well as Intel and AMD, was anticipated by microprocessors used in servers and high-end workstations.

A few words about a subject neglected in other parts of this history of the computer are in order here. A number of innovations important to the computer revolution came from Bell Labs over the years.

In 1948, the transistor was invented by Bardeen, Brattain, and Shockley, at Bell Labs.

The UNIX operating system was developed at Bell Labs. Initial work started on a PDP-7 in 1969, and then work continued on a PDP-11. A version of UNIX for the PDP-11 was distributed by Bell Labs to academic users only, on a non-profit basis. Later, UNIX became generally available once it was possible to do this and still satisfy the requirements of an antitrust consent decree that the Bell Telephone Company was under.

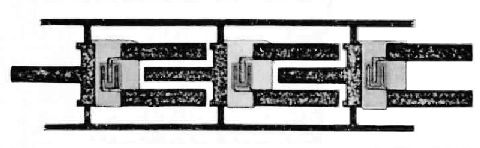

Just as IBM, because it had greater economies of scale, was able to use integrated circuits in its System/360 line of computers when monolithic integrated circuits were still too expensive to be practical, by developing its own type of integrated circuit, Solid Logic Technology, Bell also developed its own kind of integrated circuit. What they originated were Beam-Lead integrated circuits, invented by M. P. Lepselter, which can be considered to be a forerunner of Silicon-on-Sapphire (SOS) and Semiconductor-on-Insulator (SOI) integrated circuits; in a Beam-Lead integrated circuit, the interconnects between components were heavy enough to provide structural stability to the circuit, while the silicon not forming a part of components was removed.

Shown above is an image from a Bell Labs advertisement from 1966 of a test strip containing three beam-lead transistors.

Another important computer-related innovation from Bell was their Electronic Switching System. It used a computer designed for extremely high reliability. Programs were stored on a read-only memory in a separate address space, making the machine one with a Harvard architecture. The memory used for them was magnetic in nature, consisting of metal cards, which could be removed to be written on a separate device so that the ESS 1 could be re-programmed; thus it was a writeable read-only memory in the way that an EPROM (Erasable Programmable Read-Only Memory) chip would later be.

I believe I read that a later iteration of the ESS used normal read-write memory for programs, but this memory was connected to the computer which performed the switching of telephone calls so that it could only read it. A second computer, with its own program memory, was also connected to that memory, and it was used when the program for telephone switching needed to be updated or altered, or simply reloaded.

It is my opinion that this design should be taken as a source of inspiration these days, given all the problems we have with computer viruses and other attacks on computers connected to the Internet.

Imagine if one had a computer system which consisted of two connected computers. One of those computers had the hard drive, the USB ports (and, once upon a time, the floppy drives), the CD-ROM drive (or, these days, DVD-ROM or Blu-Ray, of course) as peripherals, and the other one had a big chunk of memory that was read-only to it, but writeable by the other computer - and all the connections to the Internet, such as the Ethernet ports (and, once upon a time, the modem), and the computer's wi-fi capability as its peripherals.

So the web browser, and any other Internet software, such as an E-mail client or an FTP client, would run on the second computer, after being retrieved from disk on the first computer.

Visit a web page, or get an E-mail, that causes a buffer overflow, or leads to your computer getting a malformed packet, and it's running on a CPU that has zero access to the hard drive, zero access to the system software that retrieves programs from the hard drive to run on either computer. Of course, though, when one wants to download a file, then there have to be links between the two computers to allow data to travel from the Internet-connected computer to the hard disk; but those links would be firmly under control of the first computer.

So, of course, if a user is persuaded to download and install harmful software, attacks on computers could still take place. But the kind of attacks that involve no user interaction would be much more difficult.

Although this page is titled "The Third Generation", so far it has dealt with the System/360 computer, which indeed did exclusively use integrated circuits... of a sort... and the consequences of its introduction. The Control Data 6600 and the PDP-6, as well as the original KA10 version of the PDP-10, mentioned above, were made with discrete transistors.

As noted, since IBM was the biggest computer company, it had the economies of scale that made their Solid Logic Technology a feasible option.

IBM was able to produce its own integrated circuits before monolithic integrated circuits were available at prices suitable for use in civilian computers. This was IBM's Solid Logic Technology, which involved automated manufacturing of chips where silicon dies containing individual transistors were placed on a tiny printed circuit on an alumina substrate.

In conventional transistor circuitry, each transistor was in a package, such as a TO-5 can; in SLT, the dies were placed on the circuit, and the whole assembly was then passivated and packaged as a unit. This is why SLT is legitimately regarded as a form of integrated circuit; even though it may only be a halfway step between conventional transistors and monolithic integrated circuits, a big gain in compactness was obtained by putting multiple logic gates in a single package, leaving the further gains obtained by monolithic integrated circuits as a fraction of the total difference between them and discrete transistors.

Let's look at the story of integrated circuits in the rest of the computer industry now.

Sometimes, you may read that the integrated circuit was a result of the Apollo space program. The real story, though, is a bit more complicated than that; the Apollo program did play a genuine role, but so did the requirements of the U.S. military. Those may be a bit less glamorous, and also less comfortable or more controversial, but to gloss over this would be inaccurate.

The Minuteman II missile included a guidance computer that used integrated circuits, and it was the orders for integrated circuits that this engendered that enabled the microelectronics industry to take its first steps.

But even after those orders were filled, Fairchild and other early makers of integrated circuits still weren't ready to take on the commercial market, as they were still too expensive for that.

The U.S. Air Force made more early orders for integrated circuits, for example, for computers to be used in military aircraft.

For several years, however, NASA did purchase more integrated circuits than the entire U.S. Air Force, so the role of the space program was also significant, and that included Apollo itself as well as space missions that preceded it.

It was only after the integrated circuit industry had the military and NASA as its main customers for several years that they were finally producing integrated circuits at a low enough cost that private industry could consider making use of them.

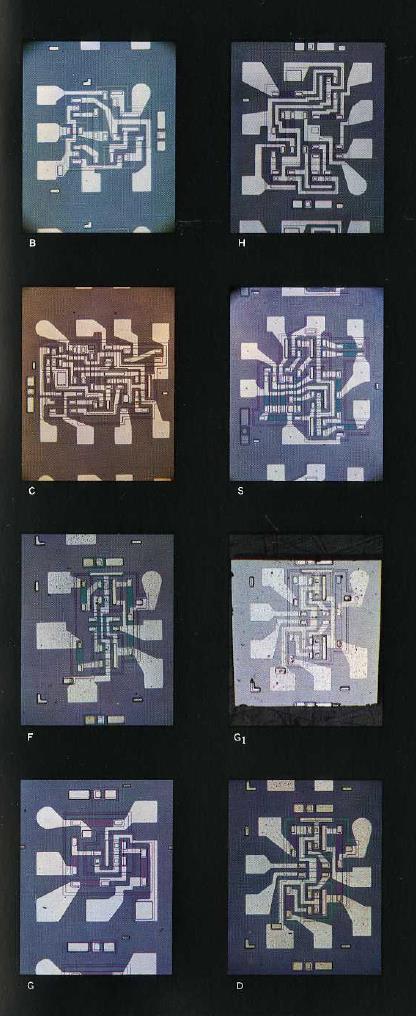

And several types of monolithic integrated circuits were developed as the technology gradually improved. The first ones from Fairchild employed RTL, resistor-transistor logic; initially, their Micrologic line consisted of four types of integrated circuit.

The image at right, from an advertising brochure for Fairchild's Epitaxial Micrologic, shows images of the eight types of integrated circuit in a somewhat later improved form of their early RTL integrated circuits. The innovation here is that instead of the components being placed directly on an N-type substrate, the substrate was P-type, and an N-type well was first fabricated on the substrate before the components were fabricated in that well. This provided better electrical isolation.

The dies are labelled as follows:

The first integrated circuit introduced was the type F flip-flop; types G and S appear to have been added next, and then several months later, types B, H, and C were added to the available product line.

Epitaxial micrologic added types G1 and D.

Texas Instruments was the supplier of the chips used in the guidance computer of the Minuteman II missile; these chips were also RTL chips.

At Texas Instruments, the DCTL Series 51 chips were developed to meet the requirements of the Optical Aspect Computer used in the first Interplanetary Monitoring Platform satellite, launched in 1963.

Delco was the company that the Air Force selected to build the Magic series of computers used in military aircraft. The initial model of computer in that series used Fairchild Micrologic integrated circuits.

Both versions of the Apollo Guidance Computer, Block I and Block II, used for unmanned and manned Apollo flights respectively, used RTL (Resistor-Transistor Logic) made by Fairchild.

Of course, though, the logic family most identified with the era of SSI (Small-Scale Integration) was TTL (Transistor-Transistor Logic). And the best-known representative of that was the 7400 series of integrated circuits, introduced by Texas Instruments and later supplied by many others. The first line of TTL integrated circuits offered for sale, however, was called SUHL, for Sylvania Universal High-Level Logic, and, as the name says, it came from Sylvania.

Fairchild and Texas Instruments were the two companies that each independently invented one form of the integrated circuit. Later, IBM and Bell Labs, as noted elsewhere on this page, invented other forms of integrated circuit.

Fairchild, in particular, has a very important place in the history of the microelectronics industry in Silicon Valley. It was founded by eight engineers employed by William Shockley, one of the inventors of the transistor; there were personality conflicts, and they also felt that he was unwilling to exploit the potential of discoveries that opened a path to producing integrated circuits.

These engineers then left Fairchild as well, but not by choice; the venture capitalist who lent them the money to start the company bought them out when he felt that having a lead in integrated circuits meant that the company no longer needed the highest level of engineering talent. This was, of course, a serious mistake, as the rate of progress in microelectronics means that any lead is quite temporary unless a company continues to develop improved technology at a fast rate.

Gordon Moore and Robert Noyce went on to found Intel.

Other important microelectronics companies founded by people from Fairchild included Signetics, National Semiconductor, AMD, and Four-Phase Systems. Many other companies can trace their lineage back to Fairchild, such as Zilog, Xilinx, Chips & Technologies, and 3Dfx.

And so I finally get around to mentioning a few more third-generation computers other than the IBM System/360, in addition to the very limited number of examples which I recently added to the page above. Most of them will be minicomputers, although I talk about the phenomenon of the minicomputer (which had its beginnings during the era of the discrete transistor) on the next page.

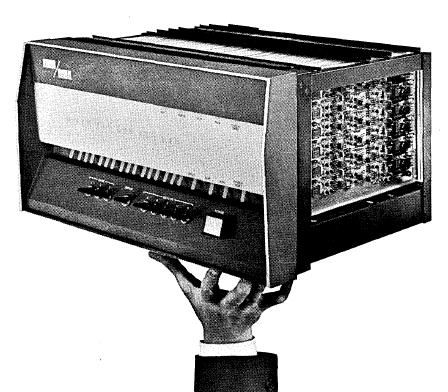

As an illustration of the change wrought by integrated circuits, an image of the Varian DATA/620 computer, made with discrete transistors, from one advertisement, is shown on the left, and an image of the Varian 620/i, now made with integrated circuits, from an advertisement from April, 1967, is shown on the right. The width of both computers is the same, as the 620/i, like most minicomputers, is designed to fit in standard 19-inch rackmount enclosures.

This advertisement shows the 620/i being held in one hand to emphasize its new smaller size.

Incidentally, the Varian 620 and 620/i computers had 16-bit instructions, but could optionally have 18-bit wide memory and an 18-bit ALU instead of 16-bit wide memory and a 16-bit ALU. This option was dropped for the later 620/L and 620/f models, which were only available in the 16-bit configuration.

A modified form of this idea resurfaced many years later, in the General Instrument CP1600 microprocessor. This microprocessor used instructions that were 10 bits wide, but it dealt with data that was 8 bits wide or 16 bits wide.

The chip could be configured with memory that was 16 bits wide, in which case data would be fetched 16 bits at a time, and a 16-bit word would be used to contain an instruction, with the high six bits unused. Or it could be configured with memory that was 10 bits wide; in that case, data would be fetched 8 bits at a time, and memory containing data would have the high two bits unused out of each 10-bit memory word.
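The bit counts in those two configurations can be checked with a little arithmetic. What follows is only a sketch of that bookkeeping in Python; the names `MemoryConfig`, `WIDE`, and `NARROW` are my own illustrative labels, not anything from General Instrument's documentation, and the widths are simply the ones stated above.

```python
from dataclasses import dataclass

INSTRUCTION_BITS = 10  # instruction width as stated in the text


@dataclass(frozen=True)
class MemoryConfig:
    """One of the two memory widths described above (hypothetical names)."""
    word_bits: int  # physical width of each memory word
    data_bits: int  # data bits fetched from each word in this configuration

    def unused_instruction_bits(self) -> int:
        # Bits left over in a word that holds one 10-bit instruction.
        return self.word_bits - INSTRUCTION_BITS

    def unused_data_bits(self) -> int:
        # Bits left over in a word that holds one data item.
        return self.word_bits - self.data_bits


WIDE = MemoryConfig(word_bits=16, data_bits=16)
NARROW = MemoryConfig(word_bits=10, data_bits=8)

for name, cfg in (("16-bit memory", WIDE), ("10-bit memory", NARROW)):
    print(name, cfg.unused_instruction_bits(), cfg.unused_data_bits())
# prints:
# 16-bit memory 6 0
# 10-bit memory 0 2
```

So each choice wastes bits somewhere: the wide memory wastes six bits in every instruction word, and the narrow memory wastes two bits in every data word.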

It has been noted above that the IBM System/360 was very influential. Eventually, other companies, such as Amdahl and Itel, would make plug-compatible computers that were clones of the IBM System/360; before that happened, several companies made imitations of the IBM System/360 that were not fully compatible.

The RCA Spectra 70 series, announced in 1965, and first delivered in 1966, were computers which were partially compatible with the IBM System/360, having the same set of instructions available for the user, but different privileged I/O instructions, so they provided their own operating systems. These computers made use of monolithic integrated circuits in the models 70/45 and 70/55, while the smaller scale 70/15 and 70/25 in their initial line-up were made with discrete transistors.

Initially, the computers of the Univac 9000 series were designed at Univac (later, Univac would buy the Spectra series from RCA to create the Univac 90 line). In addition to the 9400, pictured at left, which implemented a subset of the System/360 instruction set, they made the 9200 and 9300, which implemented the System/360 Model 20 instruction set and replaced their 1050. IBM only made a PL/I compiler for the System/360 Model 20; Univac made a FORTRAN compiler for the 9200 and 9300.

The Omega 480 from Control Data, pictured at right, at first looked to me as though it might have been a rebadged computer from Univac, as the style of the front panel looked very similar; but unlike the Univac machines which resembled the System/360, the Omega 480 was capable of running IBM operating systems. As well, the Series/90 systems that were contemporary with it did not have a similar front panel layout.

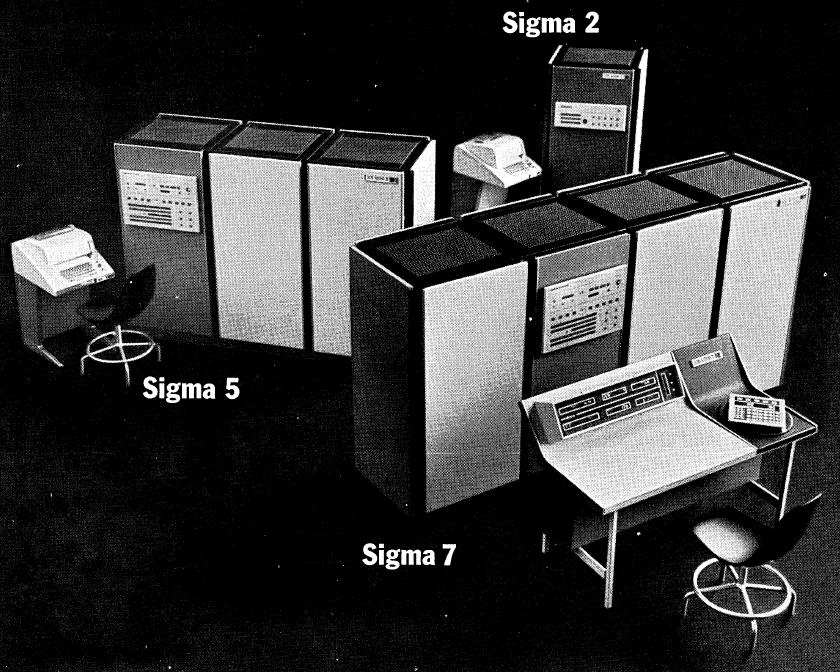

SDS made the Sigma series of computers; these didn't have the same instruction set as the System/360; instead, all their instructions were 32 bits long. But they were designed to use nearly the same data formats (negative floating point numbers were complemented, however) and to offer equivalent features (for example, they had 16 registers, they used EBCDIC as their character set). Shown below is part of an advertisement for the Sigma line from 1967; the Sigma 2, seen in the photograph, was not compatible, but was instead a 16-bit system.

The Sigma could also be viewed as demonstrating that an equivalent to the System/360 could be made using 7090-era technology; however, they were made using monolithic integrated circuits, although the occasional discrete transistor was used as well for appropriate purposes, such as the hammer drivers in the line printers.

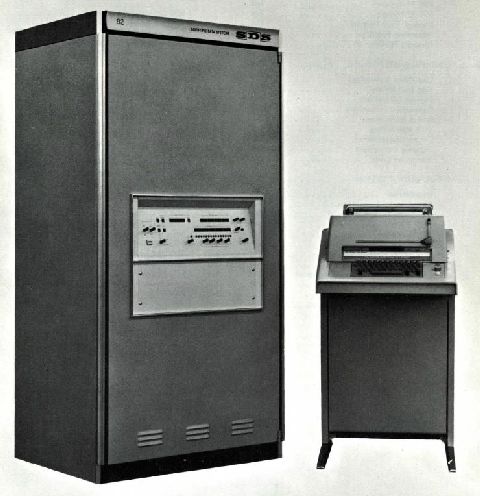

The SDS 92 computer, shown at right, was first delivered during 1965, and was advertised as "the first commercial computer to make extensive use of monolithic integrated circuits"; it was also noted that "all the flip-flops in the computer were integrated"; thus, presumably, it contained some discrete transistors as well.

This computer had a 12-bit word, and its instructions were either 12 or 24 bits in length. Its instruction set looked much more like that of an 8-bit microprocessor than like the extremely simplified instruction set of the PDP-8. The instructions that accessed memory were normally two 12-bit words long and contained 15-bit addresses, although there was also an addressing mode that accessed memory locations indirectly from instructions that were one word long.
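To see why a memory-reference instruction on such a machine normally needed two words, it helps to count bits. The Python sketch below does only that; the function names are hypothetical, and I am not assuming anything about how the SDS 92 actually laid out its opcode and address fields, only the 12-bit word and 15-bit address mentioned above.

```python
WORD_BITS = 12     # word length stated in the text
ADDRESS_BITS = 15  # address length stated in the text


def addressable_words(address_bits: int = ADDRESS_BITS) -> int:
    # Number of distinct memory locations a direct address can name.
    return 2 ** address_bits


def bits_left_after_address(instruction_words: int) -> int:
    # Bits remaining for the opcode and any modifiers once a full
    # direct address has been placed in the instruction.
    return instruction_words * WORD_BITS - ADDRESS_BITS


print(addressable_words())         # 32768
print(bits_left_after_address(2))  # 9
print(bits_left_after_address(1))  # -3
```

The negative result for a one-word instruction is the point: a single 12-bit word cannot hold a 15-bit address at all, which is consistent with the one-word memory instructions reaching memory indirectly instead.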

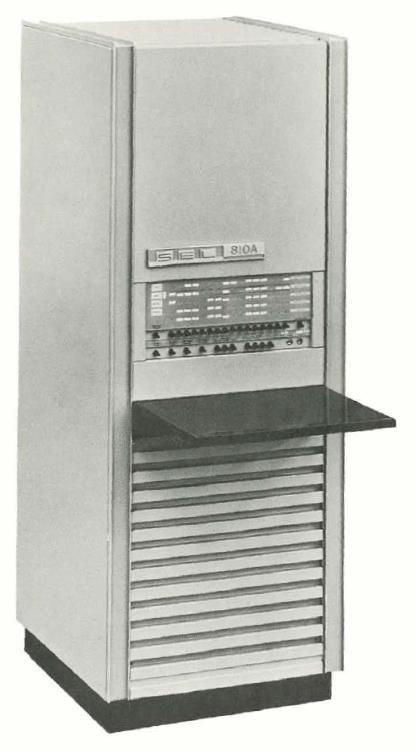

And then the 16-bit SEL 810A computer, pictured at left, came along, advertised as "the first computer to use monolithic integrated circuits throughout". The race was close enough, however, that there are other computers that might actually be candidates for that title as well.

Systems Engineering Laboratories was a company based in Florida, and a number of their sales were made to NASA for the space program; their computers were used, for example, in flight simulator training systems.

Of course, when integrated circuits became a better option than discrete transistors, pretty well all the computer companies quickly switched over. Thus, for example, while the original PDP-8 and the PDP-8/S minicomputers were made with discrete transistors, the PDP-8/I and all subsequent models were made with integrated circuits.