1984 is memorable for two advances. In that year, IBM brought out a larger model of their personal computer, the IBM Personal Computer AT, which used the more powerful 80286 processor.

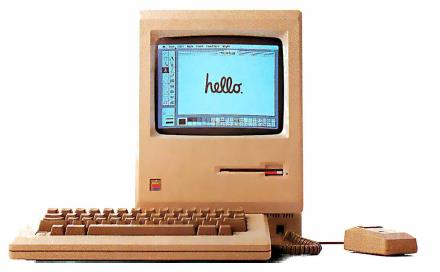

The introduction by Apple of the Macintosh computer was even more significant, as it brought about a fundamental change in the computer industry by making the graphical user interface (GUI) a serious option for business and home computer users, rather than a laboratory curiosity.

The Xerox 8010 Star was announced as a commercial product in April, 1981, predating even the Apple Lisa. It was implemented with a custom bit-slice processor, and so, despite the potential attractiveness of a GUI (which was still a very novel concept at that time, its value yet to be generally appreciated), it was too expensive to be successful.

In the image at right, the Star is shown at the bottom right along with other components of the Xerox 8010 Information System.

The Xerox Star was preceded by the Xerox Alto. This computer was built from TTL chips, and was an internal Xerox project, not sold as a commercial product. But it was not kept secret; for example, the September, 1977 issue of Scientific American had an article, titled "Microelectronics and the Personal Computer", which illustrated the features of the GUI used in that computer.

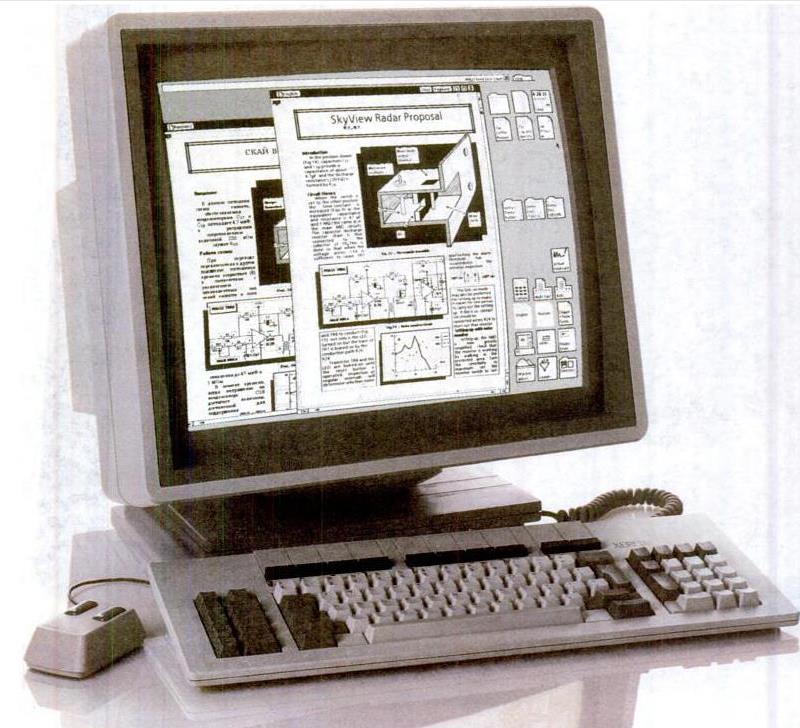

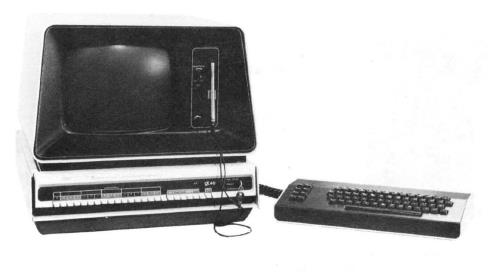

On the left is a later image, from a 1986 advertisement, of a display terminal connected to the Xerox Document System, in which the GUI is more plainly visible.

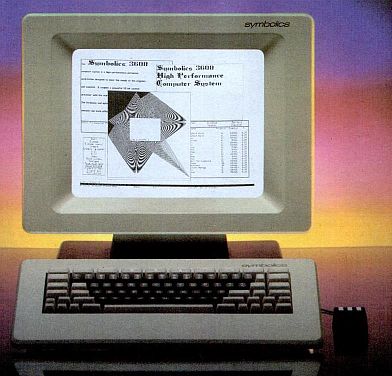

Another influential computer that provided a graphical computing environment was the LISP Machine, from Symbolics. Their first commercial product was the LM-2, which was intended from the outset to be superseded by the 3600. Delays in bringing the newer model to production meant that this happened later than hoped, but the 3600 had arrived by 1983. Pictured at right is an image of the terminal from a 3600 system from a 1983 advertisement, showing the face the system presents to the user.

From a 1986 advertisement, here are three models of the 3600 then available:

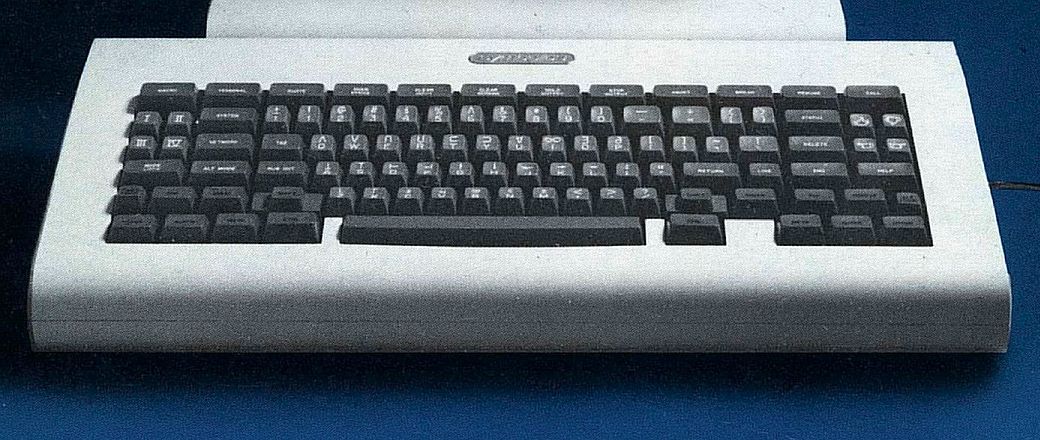

This image, from the cover of a 1982 manual for the Symbolics 3600, shows the original "Space Cadet" keyboard. It was used on the LM-2, but apparently not with the 3600, except possibly on some early deliveries:

Although from a technical standpoint, not having the extra symbols on the keys reduces the power and versatility of the system, from a marketing standpoint, it is clear that to a large proportion of potential customers, such a keyboard would be distinctly off-putting and intimidating.

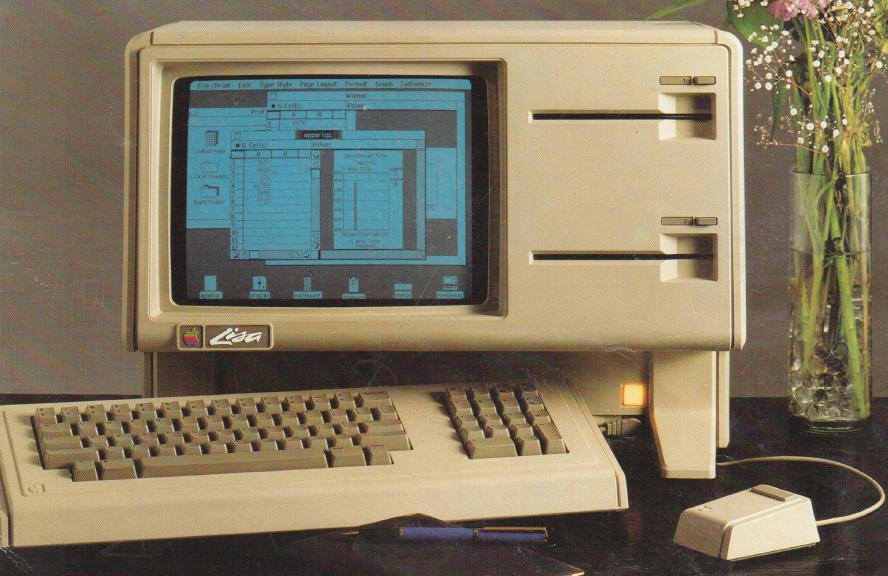

The Apple Lisa computer had been introduced in January, 1983. Like the Macintosh, it had a graphical user interface, so when the Macintosh came out, it did not come as a total shock. And, as noted above, not only was the Lisa itself preceded by the Xerox 8010 Star, but as far back as 1977, information about the Alto project within Xerox had been made public.

The Lisa is pictured below, first in its original form, and then as it appeared after it was changed to use standard 3 1/2" disks.

Originally, it used modified 5 1/4" disks, which had two openings through which the magnetic surface could be accessed at the sides, instead of one at the end inserted into the computer.

The Macintosh, pictured at left, however, was far less expensive, and was thus something home users could consider. Both of these computers were based on the Motorola 68000 processor, which was also used in early Apollo workstations, the Fortune Systems 32:16 computer, and IBM's System 9000 series of laboratory data collection computers, discussed above; all of these were quite expensive systems.

While the original Macintosh was quite limited by having only 128 kilobytes of memory, this was soon remedied by the Macintosh 512K, which had 512 kilobytes of RAM instead.

Motorola eventually made the 68008 chip, a version of the 68000 that had an external 8-bit bus available, but too late for it to have been used in the IBM PC.

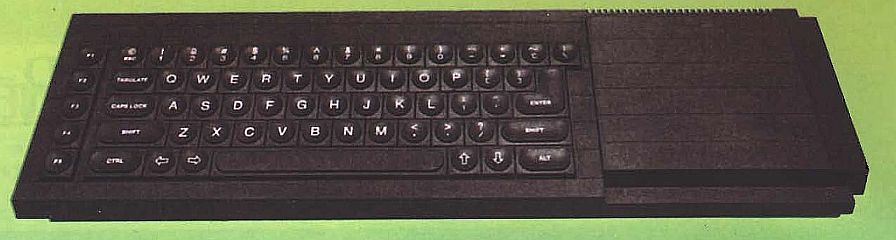

However, the 68008 was used in the Sinclair QL computer, announced on January 12, 1984.

The Sinclair QL, shown above, unlike the Macintosh, did not have a graphical user interface, although it did interact with the user by means of menus. As an inexpensive computer providing access to the power of the 68000 architecture, it was a very exciting product at the time, but its success was fatally hampered by two instances of one basic flaw. Instead of coming with floppy disk drives, it used a non-standard form of tape cartridge, and instead of offering a hard disk as an option, the option for larger storage was a solid state disk based on wafer-scale technology... which never materialized. Prospective purchasers, especially those outside of the United Kingdom, were naturally skeptical about the prospects of there being the needed support infrastructure to make the computer genuinely useful.

The Macintosh was famously announced with a television commercial that aired during the Super Bowl, which is the culminating game of American football, on January 22, 1984.

Another very important event happened in 1984: the first Phoenix BIOS became available. This enabled many companies that lacked the resources to develop their own legal and compatible BIOS to start making computers fully compatible with the IBM PC. Unlike the Compaq, which was sold at a premium price, these were often sold at lower prices, reflecting their new status as generic commodity products.

And soon after, the Award BIOS was available as a competitor to the Phoenix BIOS, and then came the American Megatrends BIOS, later versions of which are found on today's motherboards.

The government of the Republic of China (located on the island of Taiwan, by the name of which it is better known) even arranged to have a BIOS written for the benefit of that country's PC manufacturers. This was the ERSO BIOS, sometimes referred to as the DTK/ERSO BIOS, since manufacturers of computers using it customized it with their own names, and DTK was one of the largest such manufacturers.

Thus, the floodgates were opened at this point, and many more people had the opportunity to own a computer that could run the same software as the IBM PC. The cover of the October 14, 1986 issue of PC Magazine, shown at right, illustrates the situation that eventually arose, if with some exaggeration for effect.

Another notable computer introduction from 1984 was that of the IBM PCjr. This was an attempt by IBM to make a lower-priced computer that would address the home market. It came with PC-DOS 2.1 which included some modifications to support this computer.

It had two cartridge ports below the standard 5 1/4" floppy disk drive in the case.

Naturally, it had some limitations of expandability compared to the original IBM PC. This would soon become a very good reason not to buy one, when the inexpensive imitations of the full IBM PC started to come out. But one reason advanced for not considering this computer was specious.

The photo shows the original keyboard of the IBM PCjr, with push-button keys. This was condemned as not being a "real" keyboard, and the example of the original Commodore PET was often cited.

The outcry was so loud that IBM eventually provided all the original purchasers of the computer with a revised keyboard with full size keys.

Computer columnists praised IBM for this remedial action, and praised the new keyboard for its improved feel.

I remember comparing the two keyboards in an IBM storefront at the time, and in my opinion, the tactile characteristics of the two keyboards were identical. Unlike the keyboard of the original Commodore PET, the original IBM PCjr had keys which had the same spacing and position as those of any other normal typewriter-style keyboard.

So, yes, you could touch-type on the original IBM PCjr keyboard.

Even so, why did IBM risk what ended up happening by not making the keyboard of the IBM PCjr conventional in every way? Was it just to keep it out of offices, so that it wouldn't compete with the more expensive real thing?

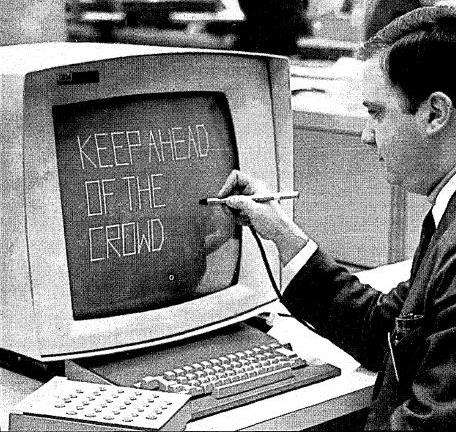

No. There was a reason behind the keyboard of the IBM PCjr, a reason which drew inspiration from a much more expensive IBM product, the IBM 2250 graphics terminal. The photograph at left of one example of that item came originally from an advertisement for Sikorsky Aviation, a maker of transport helicopters.

This is a type of graphics terminal that allowed moving graphic displays to be provided with relatively modest amounts of computer power. It had a cathode ray tube as its display element, but that tube worked like the CRT in an oscilloscope rather than the CRT in a television set. In a television set, the electron beam traces out a raster, a pattern of lines that covers the whole screen, and images are placed on the screen by varying the intensity of the electron beam, and hence the brightness of the current point on the screen, over time. Here, instead, the beam was directed to move over the screen along the lines of the image to be drawn, just as a pen would move on a sheet of paper when drawing an outline picture.
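The difference in what the two kinds of display have to keep track of can be sketched in a few lines of Python. This is only an illustration, not a model of the 2250's actual display list format: the function names, the 16 by 16 screen size, and the restriction to horizontal and vertical strokes are all assumptions made to keep the example short.

```python
# A vector display holds a short list of strokes for the beam to follow;
# a raster display holds one brightness value for every screen position.

def square_display_list(x0, y0, size):
    """Return the four strokes outlining a square, each as (xa, ya, xb, yb)."""
    x1, y1 = x0 + size, y0 + size
    return [
        (x0, y0, x1, y0),  # top edge
        (x1, y0, x1, y1),  # right edge
        (x1, y1, x0, y1),  # bottom edge
        (x0, y1, x0, y0),  # left edge
    ]

def rasterize(strokes, width, height):
    """Turn horizontal or vertical strokes into a raster frame of 0/1 cells."""
    frame = [[0] * width for _ in range(height)]
    for xa, ya, xb, yb in strokes:
        for x in range(min(xa, xb), max(xa, xb) + 1):
            for y in range(min(ya, yb), max(ya, yb) + 1):
                frame[y][x] = 1
    return frame

strokes = square_display_list(2, 2, 4)
frame = rasterize(strokes, 16, 16)

vector_items = len(strokes)                      # entries the vector display stores
raster_items = len(frame) * len(frame[0])        # cells the raster display stores
lit_cells = sum(sum(row) for row in frame)       # cells actually switched on
```

The outline needs only four entries as a list of strokes, while the raster frame needs a value for every one of its cells, whether lit or not. That is one way to see why a vector terminal could get by with modest amounts of memory and computer power.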

Terminals of this general type were usually provided with light pens. This input device served to indicate a point on the screen; they contained a lens and a photodiode or other device sensitive to light, and so a point on the screen was indicated by an electrical pulse coming from the light pen when the electron beam was at that point on the screen.

Light pens could also be used with raster displays; in the case of a vector display, to use a light pen to indicate a point in the blank part of the screen required the terminal to draw a cursor on the screen that followed the light pen from some starting point on which there was always something drawn.
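For the raster case, the way a pulse from the light pen identifies a point on the screen can be sketched as follows. This is a simplified sketch under stated assumptions: time is counted in whole dot-clock ticks from the start of the frame, vertical retrace is ignored, and the function name and the tick counts in the example are hypothetical rather than taken from any particular terminal.

```python
def light_pen_position(ticks_since_frame_start, ticks_per_line, visible_ticks):
    """Convert the time of a light pen pulse into a (column, row) position.

    ticks_per_line includes the horizontal retrace interval; only the first
    visible_ticks of each line correspond to points shown on the screen.
    Returns None if the pulse arrived while the beam was retracing.
    """
    row, tick_in_line = divmod(ticks_since_frame_start, ticks_per_line)
    if tick_in_line >= visible_ticks:
        return None
    return tick_in_line, row

# Hypothetical timing: 100 ticks per scan line, of which 80 are visible.
position = light_pen_position(3 * 100 + 25, 100, 80)   # pulse on the fourth line
retrace = light_pen_position(3 * 100 + 90, 100, 80)    # pulse during retrace
```

Since the beam visits every point of a raster once per frame, the time of the pulse alone is enough to identify the point. On a vector display the beam only visits the points that are being drawn, which is why a tracking cursor was needed there.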

Note, in the lower left corner of the image, to the left of a conventional terminal keyboard in the typewriter style, a square keyboard of round buttons, with 32 buttons in a 6 by 6 array with the corners omitted.

There was plenty of space between the buttons for a reason: so that a sheet of cardboard or plastic with holes cut out for the keys could have legends written or printed on it, so that the keyboard overlay could be used with programs that made use of the display. The top of such a sheet could have notches to indicate which sheet was placed on the keyboard, thus allowing it to be used to choose between many more than 32 possibilities.
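The arithmetic behind "many more than 32 possibilities" is simple enough to write out. In this sketch, the number of notch positions along the top of the overlay is left as a parameter, since it is not stated above; the value used in the example is purely illustrative.

```python
# 6 by 6 array of buttons with the four corners omitted.
KEYS = 6 * 6 - 4

def distinct_functions(notch_positions):
    """Count key/overlay combinations, assuming each notch position is
    either cut or not cut, so that it contributes one bit of overlay code."""
    overlays = 2 ** notch_positions
    return KEYS * overlays

# Illustrative only: with 3 notch positions there would be 8 possible overlays.
example = distinct_functions(3)
```

With no notches at all, the count falls back to the 32 buttons themselves; each additional notch position doubles it.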

While the PCjr didn't do the trick with the notches, overlays for its keyboard were made, so that PCjr software could make use of customized keyboard arrangements easily.

The IBM 2250 came in several forms.

The Model 1 was intended to be used with a dedicated IBM System/360 computer; it had the basic operator control panel, from which that System/360 computer could be started up or shut down, built right into it.

The Model 2 and Model 3 were controlled by an IBM 2840 Display Control - Model 1 of that display control for the Model 2 of the 2250, and Model 2 of that display control for the Model 3 of the 2250.

The Model 4 of the 2250 display was attached to an IBM 1130 computer, and the IBM 1130 could be used by itself for computing tasks involving the display, or it could be used to give the terminal more local processing power while it was connected to an IBM System/360 computer.

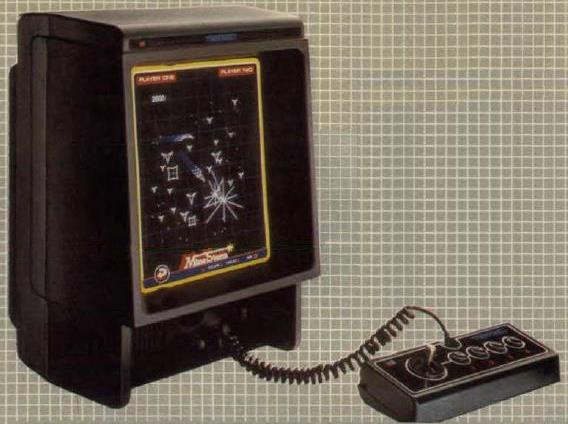

Control Data, the Digital Equipment Corporation, and Xerox also made terminals of the same general type as the IBM 2250. Much later, the Vectrex home video game, pictured at right, made use of the same principle.

Pictured below is Control Data's 240 series graphics terminal subsystem, also known as GRID, for Graphic Remote Integrated Display.

This image is from an advertising brochure by Control Data for the terminal. There is a high-quality color image on the Internet of what appears to be the same model, working with a light pen on an image of a globe on the screen. That picture even appears on the front cover of the book The Power of Go Tools for some reason; presumably, the language Go is well suited to working with graphics, even if this isn't the hardware it would run on.

The internal processor in this terminal, in the cabinet on the woman's right, is a processor with the same instruction set as the Control Data 160 computer.

The GT40 interactive display from the Digital Equipment Corporation used a smaller model of the PDP-11 to provide it with processing power; an image is shown below.

The interactive graphics terminals, and one video game, shown above benefit from the versatility of the CRT; it need not be used only as a raster display, but instead the electron beam can be deflected in the directions required to trace out outlines directly. There's no reason this can't be done inexpensively by a conventional magnetic deflection yoke, as found in a TV set, instead of by electrostatic deflection plates, as typically used for higher precision in oscilloscope displays.

But that is only one aspect of the versatility that the CRT offered. Another thing one can do with a CRT is choose different phosphors to use.

Thus, pictured above is a Tektronix 4006 graphics terminal, between a tape drive and a hardcopy unit.

This was a less expensive successor to the very popular Tektronix 4010 graphics terminal, and worked on the same principle.

Here, as in the types of vector graphic displays discussed above, the electron beam directly traced out the outlines of what was to be drawn. However, instead of continuously redrawing those outlines, so that they could be seen, the outlines only needed to be drawn once. That's because the CRT was a storage tube, similar to the kind once used as a computer memory (the Williams Tube). In fact, the printer unit shown on the right of the image above functions by means of the terminal reading out the pattern of illuminated areas in the storage tube used for display, so the storage tube in the terminal did have that aspect of the functionality of a Williams Tube.

What's the difference, then? The type of CRT used in a Tektronix 4010 terminal and other similar devices is known as a Direct-View Storage Tube. A storage tube used as a computer memory did not need to be usable as a display, and so there could be an opaque metal sheet in front of the phosphors as part of a capacitative element in the tube. But even if that was the case, the tube could be constructed with a window in the back through which the phosphor could be seen; this would be indirect view as distinct from direct view.

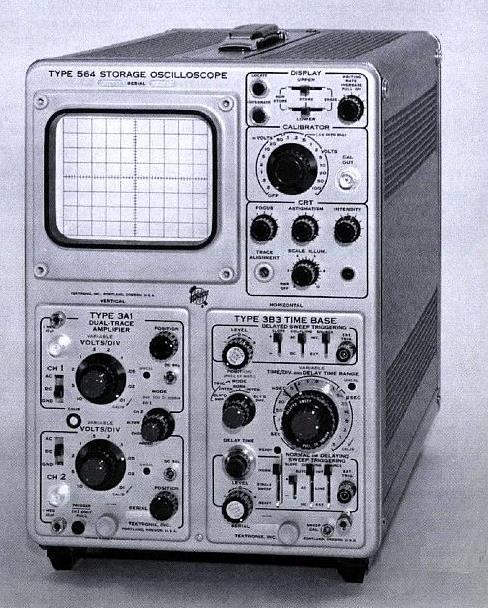

Direct-view storage tubes were in existence at the time Williams Tubes were used for storage, but this technology was significantly refined at Tektronix. Originally, it was used in special-purpose oscilloscopes, such as the model 564 oscilloscope pictured at left.

A modern design, of course, would simply use memory in the terminal to replay the characters instructing the terminal to draw an image, and the printer would then draw the image again; and, indeed, many raster graphics terminals emulated the Tektronix 4010 terminal, accepting the same commands to draw an image, but presenting the image at a somewhat lower resolution.

Other choices are possible. A high-resolution color display, for use in such applications as air traffic control, might use a beam penetration CRT; this would provide a limited gamut of colors by using two phosphors, applied in layers without a shadow mask or other similar provision within the CRT. Instead, the voltage difference between the cathode and the screen would be varied to determine if the green phosphor, or the red phosphor, or both for the display of yellow lines, would be excited by the electron beam.

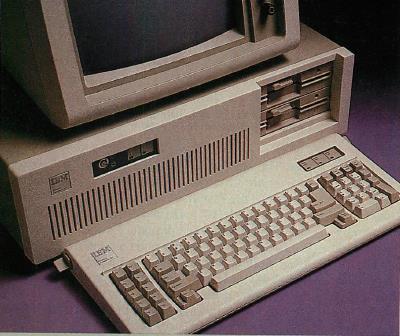

Although the introduction of the Macintosh did bring about a more fundamental change to the computing scene than that of the IBM Personal Computer AT, since computer chips were getting more powerful from the start of the microcomputer era onwards, this computer, pictured at right, was still a very important milestone in microcomputer history.

The original IBM PC was based on the Intel 8088 chip, which, although it was a 16-bit chip internally, had an 8-bit external data bus. The 80286, however, like the 8086, had a full 16-bit data bus. Therefore, the slots in the IBM PC AT were designed for a new bus, but this bus was a compatible superset of the bus in the original IBM PC, so that older peripheral cards could still work in the AT.

Also, the keyboard of the AT was revised. As there were complaints about the placement of the | and \ key between the Z key and the left-hand shift key, this was changed on the AT; the arrangement was still not what I would consider ideal, as now there was a key between the + and = key and the back space key.

Another innovation of the IBM Personal Computer AT was the introduction of new, higher-density 5¼-inch floppy disks that could store 1.2 megabytes of data on them rather than only 360 kilobytes of data.
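The two capacities follow directly from the geometry of the disks. The figures for sides, tracks, and sectors used below are those of the standard PC formats, which are not spelled out in the text above; the function name is just for illustration.

```python
def floppy_capacity(sides, tracks, sectors_per_track, bytes_per_sector=512):
    """Return the formatted capacity of a floppy disk in bytes."""
    return sides * tracks * sectors_per_track * bytes_per_sector

# Double-density 5 1/4" disk as used in the PC and XT: 40 tracks, 9 sectors.
double_density = floppy_capacity(2, 40, 9)

# High-density 5 1/4" disk introduced with the AT: 80 tracks, 15 sectors.
high_density = floppy_capacity(2, 80, 15)

double_density_kb = double_density // 1024   # 360
high_density_kb = high_density // 1024       # 1200
```

Note that the "1.2 megabytes" is really 1200 kilobytes of 1024 bytes each. The increase came from doubling the number of tracks and also fitting more sectors on each track.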

Later computers, based on the Intel 80386 chip, were able to use the same bus as the IBM Personal Computer AT, so its basic design remained an industry standard for some time.

The May and June 1984 issues of BYTE Magazine contained a series of articles by Steve Ciarcia on the construction of the Trump Card, an add-in card for the IBM PC which was itself a microcomputer based on the Zilog Z8000 chip. This was another of the very few ways in which one could put a Zilog Z8000 processor to use for computing.

The parts for assembling the board, or assembled Trump Cards, were available from Sweet Micro Systems, most famous for another of their products, the Mockingboard, a sound card for the Apple II computer with speech synthesis capabilities.

I haven't shown too many images of computers belonging to another very important category, the graphics workstation. On the right is an image of an Apollo DN300 computer; it used the DOMAIN operating system, and was based on a RISC chip; earlier Apollo workstations used the 68000 processor.

Also, 1984 saw one of the last exciting developments in the field of 8-bit computing.

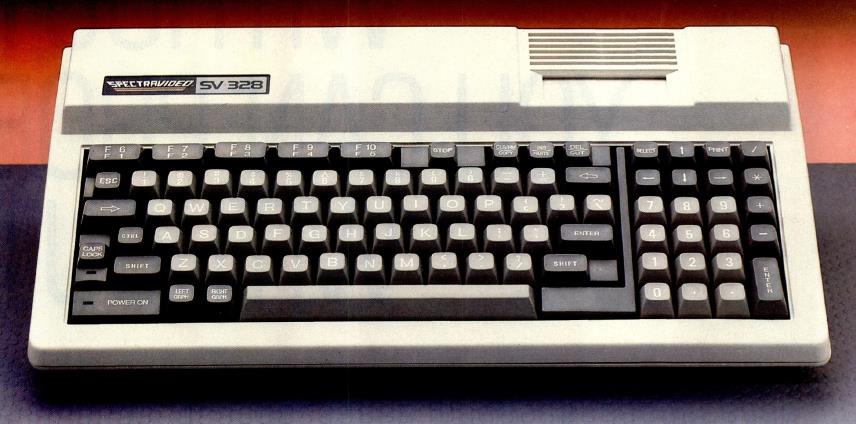

Spectravideo came out with a pair of computers with both upper and lower case on their keyboards, still something of a rarity; below is pictured the more expensive model with a keyboard made of regular keys instead of buttons, the SV-328:

The keyboard has a very nice arrangement, and this computer inspired a Microsoft standard for 8-bit computers called MSX. (The Spectravideo, however, wasn't fully conformant with the standard as it was established.)

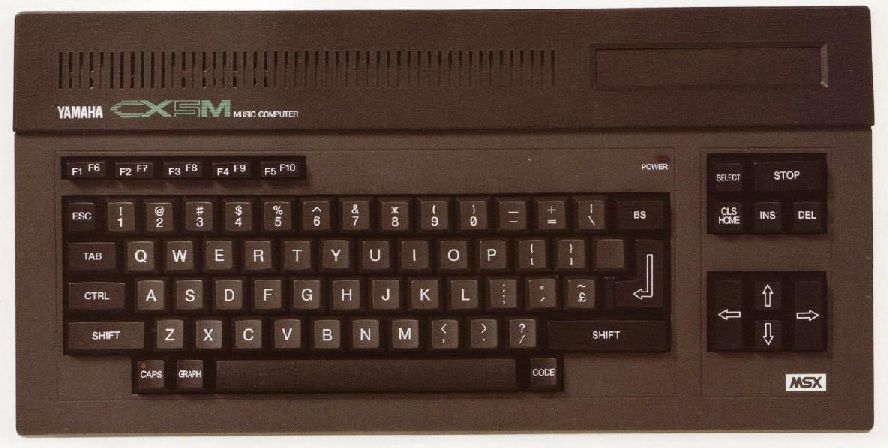

The MSX standard was most significant in the Japanese market, but some MSX systems were sold worldwide. One MSX computer that was memorable was the Yamaha CX5M, since it included a sound chip with capabilities similar to those of its popular FM-based synthesizers; it is pictured below:

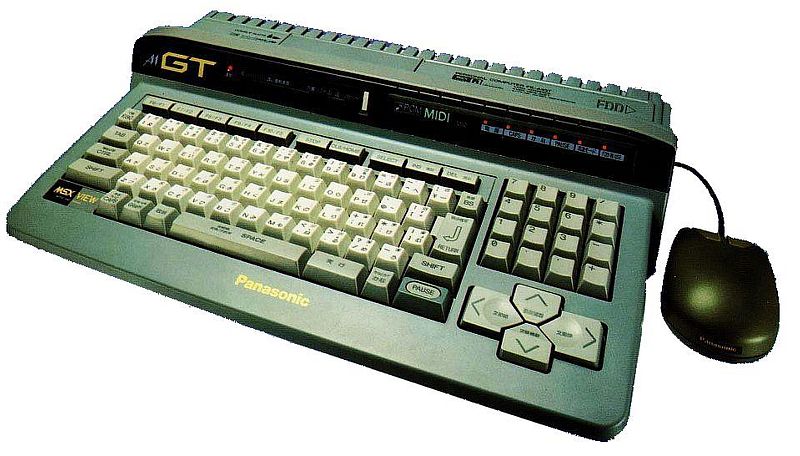

Later on, an extension of the MSX standard known as MSX 2 was defined. Machines made to this specification ended up only being sold in Japan. Panasonic was the last company to make MSX computers, and it ended up being the only company to make computers that followed the final extension to the MSX standard, the 16-bit MSX TurboR design.

Pictured above is the Panasonic FS-A1GT, the larger of the two MSX TurboR computers made by Panasonic. Those computers were introduced in 1991. They were based on the R800 chip made by a Japanese chipmaker with the confusing name of ASCII, a 16-bit chip that also could execute Z80 code, so as to allow full upwards compatibility with programs for the original MSX computers. The original Z80 chip had a 4-bit internal ALU, rather than the 8-bit one that might be expected, but the R800 had a full 16-bit ALU.

This is, of course, somewhat reminiscent of the Apple IIgs.

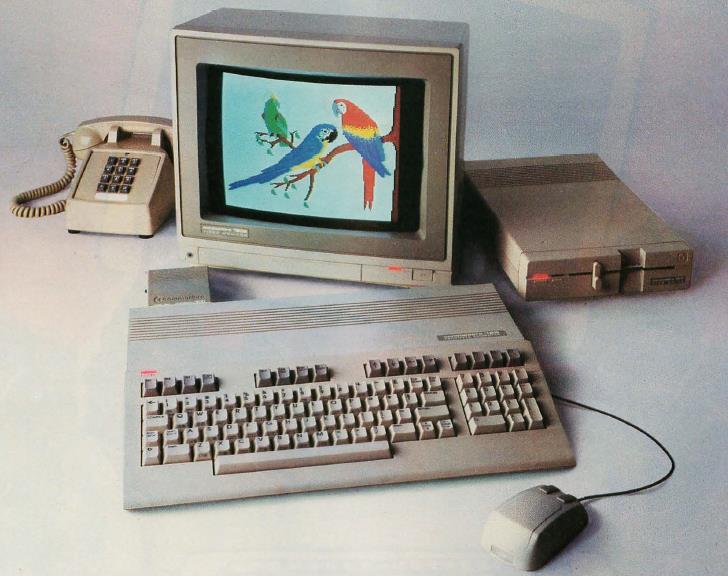

Actually, though, January 1985 also was the month of another exciting development in the world of 8-bit computing. That was when Commodore announced the Commodore 128, shown below. This was a compatible successor to the Commodore 64 which included a Z80 processor in addition to having 128k instead of 64k of memory. A version of CP/M for this computer could be purchased separately. And, if one used a monitor, the computer did have an 80-column text output, so there wasn't a compatibility issue similar to that of the 52-column Osborne I. However, the use of a disk drive derived from the 1541 disk drive design would have impaired performance compared with more typical CP/M systems.