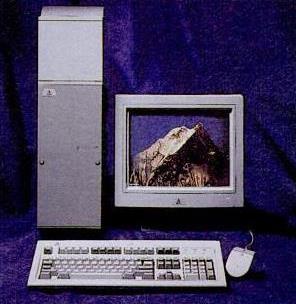

In the year 1988, Ardent brought their Titan supercomputer to market. This system is pictured at right. It was built from MIPS 2000 microprocessor chips, which gave it a conventional instruction set, plus custom circuitry that performed all the floating-point arithmetic, and also allowed it to function as a vector supercomputer, resembling the Cray I and its successors, at a much lower price.

On the left, from a 1988 advertisement, is an IBM RT workstation. This appears to have used the multi-chip processor which preceded the PowerPC line of single-chip microprocessors; there was a choice of a 170 nanosecond or 100 nanosecond cycle time (5.88 or 10 MHz) and floating-point chores were handled by a Motorola 68881 math coprocessor.
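The clock rates quoted in parentheses follow directly from the cycle times, since frequency is the reciprocal of the period. As a quick check of that arithmetic (the function name here is purely illustrative):

```python
def cycle_time_to_mhz(cycle_ns: float) -> float:
    """Convert a clock cycle time in nanoseconds to a frequency in MHz."""
    # f [Hz] = 1 / t [s]; with t given in nanoseconds, f [MHz] = 1000 / t
    return 1000.0 / cycle_ns


for ns in (170, 100):
    print(f"{ns} ns -> {cycle_time_to_mhz(ns):.2f} MHz")
# 170 ns -> 5.88 MHz
# 100 ns -> 10.00 MHz
```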

In 1989, Apple responded to popular demand by bringing out the Mac Portable computer. This computer was somewhat large for a laptop computer. It used sealed lead-acid batteries from Gates to operate when not plugged in, rather than the more common nickel-cadmium batteries. Presumably, this was because it had heavier current demands from being largely based on the design of the desktop Macintosh.

As it was expensive, it had limited success in the market.

Below is illustrated a workstation from DEC, the DECstation 2100, from 1989. This RISC workstation was based on the MIPS chip, as DEC's own RISC chip, the Alpha, would only be introduced later, in 1991, and DEC would introduce a line of Alpha-based workstations in 1993.

The image above is from Wikimedia Commons, licensed under the Creative Commons Attribution Share-Alike 3.0 Unported License, and is thus available for your use under the same terms. Its author is Simon Owen.

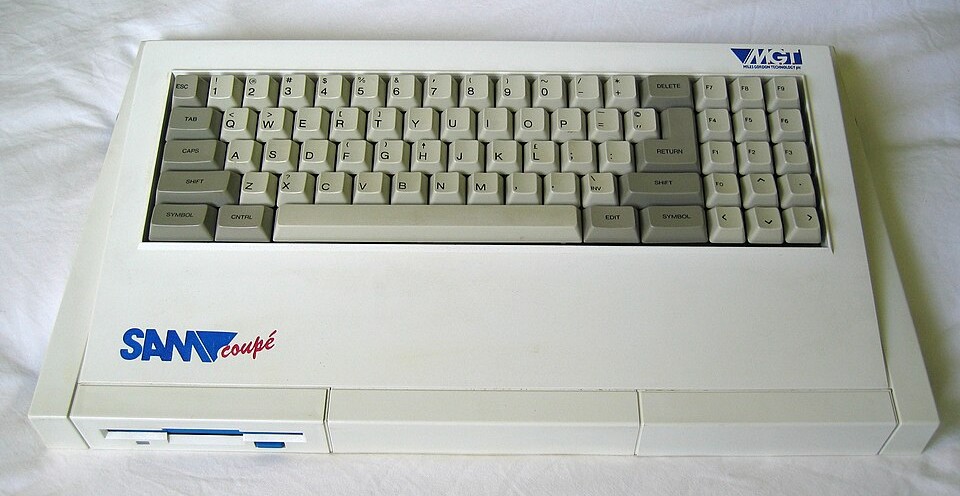

In late 1989, a new 8-bit microcomputer was offered for sale to British home computer enthusiasts. This computer, the SAM coupé, was intended as a successor to the Sinclair Spectrum. The plan had been to bring this computer out much earlier, but its development had met with a number of delays. Sadly, the company that made it, Miles Gordon Technology (MGT), failed in 1990.

Aside from improvements in sound and graphics, one of the notable features of this computer was an implementation of MIDI, just as was offered by the Atari ST.

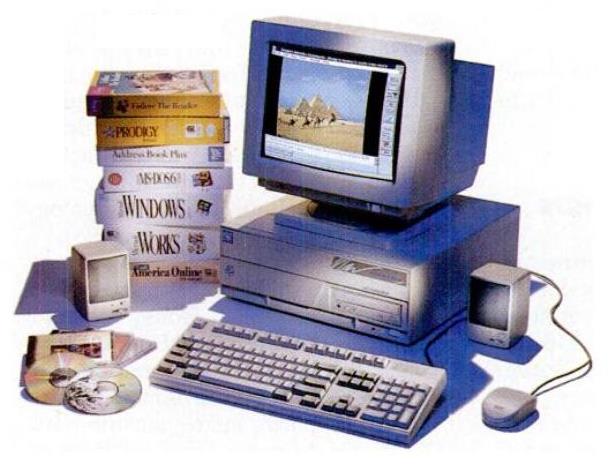

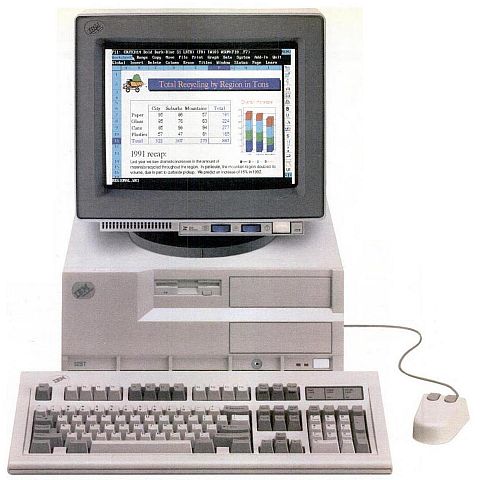

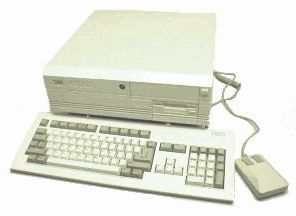

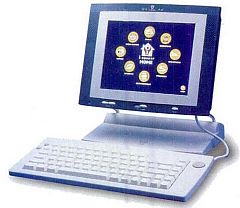

In 1990, IBM came out with the Personal System/1 line of computers. One member of that line was an all-in-one computer which had a configuration reminiscent of the original Macintosh, even if it looked quite different with IBM styling. Pictured at left is another member of that lineup, with a conventional desktop form factor, along with the software that came with it. Note that the keyboard is not a Model M, but is designed to save space around the edges.

As the PS/1 was aimed at the home market, it is sometimes referred to as the successor to the PCjr. The PCjr, however, while essentially compatible with the IBM PC, was still limited in a number of ways compared to the IBM PC, being considerably stripped down to permit it to be made at a lower cost, while the systems in the PS/1 lineup were genuine PC-compatible computers.

In April, 1992, Microsoft offered version 3.1 of their Microsoft Windows software to the world. This allowed people to use their existing 80386-based computers compatible with the standard set by the IBM Personal Computer to enjoy a graphical user interface similar to that of the Macintosh, if not quite as elegant, at a far lower price. One major advance over version 3.0 was the addition of support for the TrueType font standard, licensed from Apple.

There was a Microsoft Windows 1.0, and there was a Microsoft Windows 2.0, and a 3.0 as well, of course. The first version of Microsoft Windows required all the windows that were open to be tiled on the screen, rather than allowing overlapping windows as on the Macintosh and the early Xerox machines that pioneered the GUI, and this was generally seen as a serious limitation by reviewers at the time. Windows 3.0 was promoted by an arrangement that allowed Logitech to include a free copy with every mouse that they sold. (When Windows 3.1 came out, Logitech actually sued Microsoft, because Microsoft had decided that Windows 3.1 was good enough that it no longer needed to be promoted in that way. Needless to say, their suit was unsuccessful in the courts.)

It was Windows 3.1, however, that enjoyed the major success that led to Windows continuing the dominance previously enjoyed by MS-DOS. The major factor usually credited for this is that Windows 3.1 was the first version to include TrueType, a technology licensed from Apple, thus allowing it to be used for preparing attractive documents on laser printers in a convenient fashion, with the ability to see the fonts being used on the computer's screen, just as had been possible on the Macintosh.

Except for TrueType, Windows 3.0 (May 22, 1990) already offered most of the features of Windows 3.1 that made it reasonably useful. And it included a Reversi game, which was dropped from Windows 3.1. It was with Windows 2.1 (May 27, 1988), which was distributed both as Windows/286 and Windows/386, that some of the important features of Microsoft Windows made possible by the Intel 80386 architecture were first introduced, in its Windows/386 form.

A brief note on digital vector fonts might be in order here.

Apple developed the TrueType format, which allowed the curved portions of character outlines to be represented by quadratic spline curves, as an alternative to licensing a digital font format that was already in existence and wide use at the time, Adobe's Type 1 fonts, which used cubic Bézier curves.
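The relationship between the two kinds of curve can be made concrete with a short sketch. It assumes the usual textbook definitions of a quadratic segment (three control points, as in TrueType outlines) and a cubic Bézier segment (four control points, as in Type 1 outlines); the function names are my own and are not taken from either font format. Any quadratic segment can be rewritten exactly as a cubic one by "degree elevation", while the reverse is in general only an approximation.

```python
def quadratic_point(p0, p1, p2, t):
    """Point at parameter t on a quadratic segment with control points p0, p1, p2."""
    u = 1.0 - t
    return tuple(u * u * a + 2 * u * t * b + t * t * c
                 for a, b, c in zip(p0, p1, p2))


def cubic_point(p0, p1, p2, p3, t):
    """Point at parameter t on a cubic Bezier segment with four control points."""
    u = 1.0 - t
    return tuple(u ** 3 * a + 3 * u * u * t * b + 3 * u * t * t * c + t ** 3 * d
                 for a, b, c, d in zip(p0, p1, p2, p3))


def elevate_quadratic(p0, p1, p2):
    """Return the four cubic control points that trace the same curve."""
    # Each inner control point lies two thirds of the way from an end point
    # towards the single quadratic control point.
    c1 = tuple(a + (2.0 / 3.0) * (b - a) for a, b in zip(p0, p1))
    c2 = tuple(c + (2.0 / 3.0) * (b - c) for b, c in zip(p1, p2))
    return p0, c1, c2, p2


quad = ((0.0, 0.0), (1.0, 2.0), (3.0, 0.0))
cubic = elevate_quadratic(*quad)
print(quadratic_point(*quad, 0.5), cubic_point(*cubic, 0.5))
```

The two printed points coincide up to rounding, and the same holds for every value of t between 0 and 1.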

The Adobe Type 1 font format, however, was not the first digital font format in existence. One which preceded it was the Ikarus font format, developed by Peter Karow. This format is still supported by the program IkarusMaster within the FontMaster font utilities package from Dutch Type Library (DTL).

And Donald Knuth devised METAFONT, which instead of describing characters in terms of outlines, described a center line to be drawn with an imaginary pen nib which was also described. This accompanied his TeX typesetting program project.

But the granddaddy of all the electronic outline font formats was devised by Peter Purdy and Ronald McIntosh back in the 1960s for the Linofilm electronic CRT typesetter. This format used Archimedean spirals instead of Bézier curves, and thus was less sophisticated than what would come later. This is because the Archimedean spiral was an obvious and mathematically simple line of varying curvature that could substitute for the draftsman's French curve.

Windows 3.1 was a very important milestone for computers, as it made it possible for many people to make use of a GUI on the computer they already had, at the price of buying a software program rather than an entirely new computer. Not every IBM PC-compatible computer out there at the time, of course, was capable of running Windows, but having a GUI available on the most popular platform was still very important.

Netscape Navigator 1.0 became available in November, 1994. Netscape was the first popular web browser, being preceded only by such browsers as Mosaic and Viola. This could be considered as the event that paved the way for today's computer scene, where people don't get computers only if they need to use a word processor or spreadsheet, but instead primarily have computers so that they're able to surf the Web.

On this page, I've focused on the dramatic early days of the microcomputer, when many different companies made their own incompatible computers. Windows 3.1 made an adequate GUI available for users of PC-compatible computers (with a video card and a processor more advanced, of course, than what the original PC offered), so that the Macintosh wasn't the only alternative if you wanted a GUI. Particularly after that, the market largely settled down to multiple makers of similar "clone" PCs, with the only real competition between Intel and the few other chipmakers that were licensed to make x86 processors, such as Cyrix at one time, and AMD today.

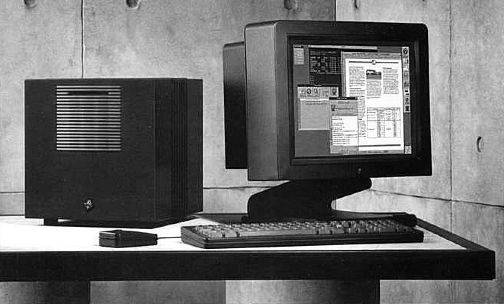

But the Macintosh still retained a presence. For a time, Steve Jobs parted ways with Apple, and during that period he offered his own new computer, the NeXT, which was built around BSD Unix. That computer had a monochrome high-resolution display, on which four gray-scale levels were available, and is pictured below.

Initially, the NeXTcube shipped with a copy of Mathematica, which definitely tempted me to run out and buy one if I could have afforded it!

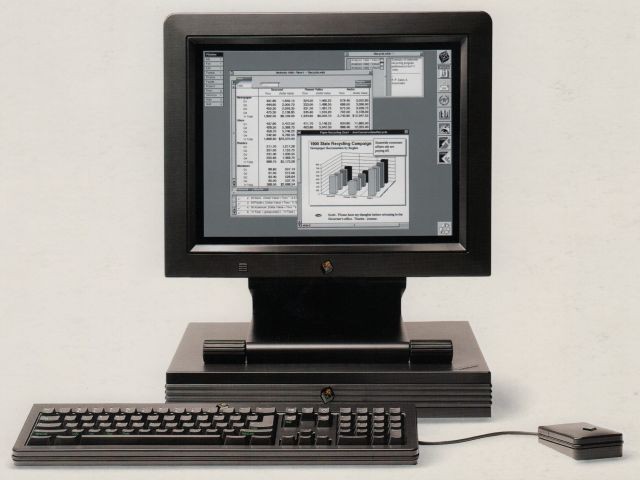

The NeXTcube was succeeded by the NeXTstation, pictured at left, in September of 1990. The NeXTstation used a 68040 CPU running at 25 MHz, and for a slightly higher price, one could get the NeXTstation Turbo at 33 MHz. Along with the standard NeXTstation, there was also the NeXTstation Color, which offered a 12-bit color display.

It might be noted that the NeXT was introduced in 1988. It was not until 1992 that Windows 3.1 was introduced, and not even until 1990 that Windows 3.0 was introduced; at the time, the version of Windows available was Windows/386, which had not yet achieved massive popularity. The Amiga was still a popular and viable platform. So, while the computers descended from the IBM PC were definitely dominant, this dominance had not yet reached the absolute nature that it has today.

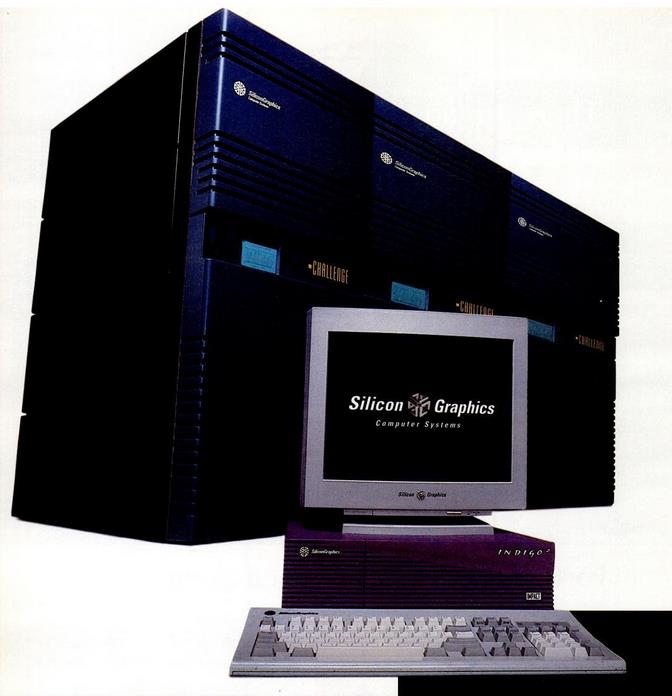

Below is an image of two powerful computers offered in 1992 by Silicon Graphics: the Indigo 2 workstation, and the Challenge server.

The Indigo 2 was available with a range of MIPS processors, three speeds of the R4000, or an R4400. Later related models used the R8000 or the R10000. These processors were used in the Challenge servers (or supercomputers) as well.

These computers ran IRIX, Silicon Graphics' licensed version of UNIX.

It wasn't just specialized companies like Sun and Apollo that made powerful workstation computers. Major computer manufacturers also entered this market; for example, IBM made workstations based on PowerPC chips.

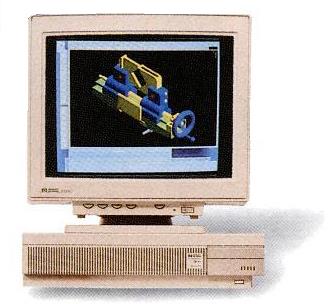

The Model 735 Apollo workstation pictured at left, also from 1992, used the PA-RISC microprocessor, Apollo having been acquired by Hewlett-Packard.

In September, 1992, IBM announced the ValuePoint series of computers. A model from that series is pictured at right.

In 1993 (at least in the United States domestic market; it began a year earlier in Europe) a line of computers was introduced by a company named Ambra, billed as "An IBM Corporation". This was IBM's way of making a line of computers that would attempt to compete with the low prices of clone systems while keeping them at a distance from its own brand.

These computers encompassed a range of performance; at left is pictured the top-of-the-line model, which could optionally come with two Pentium processors.

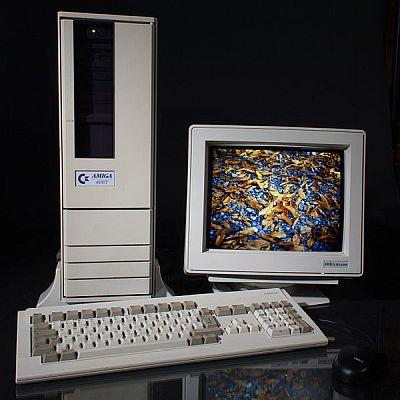

The last version of the Commodore Amiga to be released was the Amiga 4000. Initially, it was a desktop machine with a 68040 CPU, pictured at left, released in October 1992; later, starting in April 1993, getting an Amiga 4000 with a 68030 CPU became an option, and a tower version, pictured at right, became available as well.

The image of the tower version of the Amiga 4000 was graciously released into the public domain by Kaiiv through Wikipedia (which I have slightly retouched). This version had extra ports as well as one extra expansion slot. As well, the image of the desktop version was also graciously released into the public domain through Wikipedia, by Crb136.

As the A 4000 T was the best possible Amiga, it is now a rare collector's item sold at premium prices, despite having continued to be manufactured by Escom after the departure of Commodore.

On March 14, 1994, Apple announced the first Macintosh computers which used microprocessors based on the IBM PowerPC architecture instead of 680x0 microprocessors.

IBM introduced the POWER architecture with its RISC System/6000 in 1990. An alliance between Apple, Motorola, and IBM was formed in 1991 to make single-chip microprocessors based on an expanded version of this architecture generally available as a successor to the 680x0 architecture.

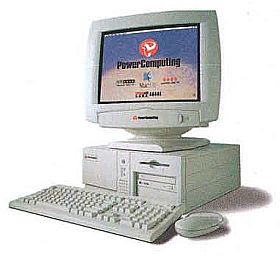

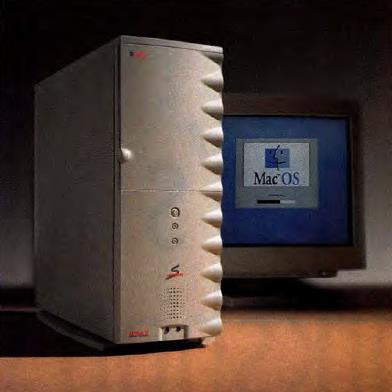

Another important event in Macintosh history was the short-lived Macintosh OS 7 licensing program. From early 1995 to mid-1997, other companies were permitted to license the ROMs of the PowerMac and Macintosh OS 7, and to ship those with compatible systems they manufactured.

Two of the companies which took advantage of this offer were PowerComputing and UMAX. An early system by PowerComputing, possibly the Power 100, is shown at left, and the Supermac S900 is shown at right. (UMAX obtained the Supermac brand for the United States from Radius, a maker of Macintosh-compatible peripherals; in other countries, UMAX used the brand name Pulsar for this series of computer.)

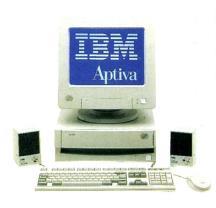

In September, 1994, IBM announced the Aptiva; this line of computers replaced the PS/1, but included models with a wide range of performance levels despite being aimed at the home market. One model is pictured at left.

In 1988, 2.88 megabyte floppy disks became available. Not many computers used them, but the magnetic media used had the capacity to store much more than 2.88 megabytes of data, with the accuracy with which it was possible to position the read and write heads of a floppy drive being the limiting factor.

As a result, a class of disk drive known as the "floptical" drive was developed, where one surface of a disk was coated in the same way as a 2.88 megabyte disk, and the other surface had a printed pattern on it for an optical sensor which would guide the magnetic read and write heads for the other side of the disk.

The most well-known product in this category was the Zip drive by Iomega, pictured at left, which was introduced in late 1994.

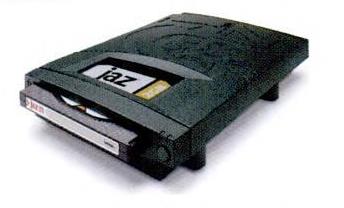

A year later, Iomega brought out a removable hard drive, called the Jaz, pictured at right.

Pictured below is the Dell Dimension XPS Pro150 computer, as shown in an advertisement.

This computer, from early 1996, was their first computer to use Intel's new Pentium Pro microprocessor. As the name indicates, it ran at 150 MHz, but more importantly, its floating-point pipeline featured out-of-order execution. As well, the Pentium Pro microprocessor included 256 kilobytes of cache in the processor module; it was on a separate die, but connected directly to the CPU die.

It also used the advanced SRT division algorithm; although this was not quite as fast as Newton-Raphson division or Goldschmidt division, it was still a significant improvement on the ordinary division method.

Thus, the Pentium Pro was architecturally reminiscent of the IBM System/360 Model 195 computer from 1969. However, it was plagued by one significant limitation: while it ran newer 32-bit software very well, its performance on older 16-bit software, which was very common at the time, was poor. This was corrected on the Pentium II processor, which had the same basic architecture, but a slower connection to its cache memory.

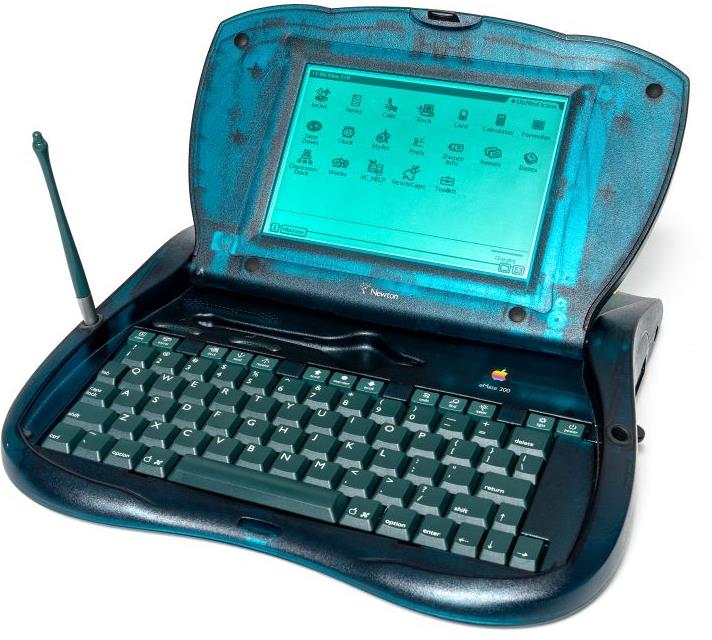

In March, 1997, Apple released the eMate 300, a product which was definitely unique. It used an ARM processor, and was based on the technology of the Apple Newton, but it had a laptop-like form factor, with a keyboard, and so unlike the Newton, did not have to rely on handwriting recognition.

The image above is from Wikimedia Commons, licensed under the Creative Commons Attribution Share-Alike 4.0 International License, and is thus available for your use under the same terms. Its author is Felix Winkelnkemper.

The limitations imposed by its use of a personal organizer's operating system were justly felt not to be problems, but indeed assets, to its intended use in the education market.

Steve Jobs, though, was not a fan of the product, and so it ended up being pulled before it really had a chance to succeed or fail on its merits. I'm not particularly critical of him for this, as I'm not a fan of products like this either.

It is reminiscent of a couple of other quasi-laptops that came along later.

There was the One Laptop Per Child project, which succeeded in making a laptop at a price of around $200, but failed to seriously address how it would fit into meeting the needs of developing countries.

And, of course, there is the Chromebook, which, much to my astonishment, continues to exist as a successful product category. Actually, though, if I remember correctly, it's now possible to download and save applications on a Chromebook's local storage, fixing the limitation of the initial version that made me very skeptical of it, so why wouldn't a cheaper computer that lets you get away from Windows be successful?

June 1998 was the month in which the storied Digital Equipment Corporation was acquired by Compaq. Compaq itself would later be acquired by Hewlett-Packard in May, 2002.

At the time Steve Jobs returned to Apple, its market share had sunk to a perilously low level. While he brought about many substantive new features to the Macintosh over time, since something was needed quickly to revive interest, he began with something that many would regard as trivial: a new Macintosh with different visual styling.

Of course, it is the original iMac to which I refer. The initial model, pictured at left, was in a color called "Bondi Blue", after the waters off of Bondi Beach in Australia. It was released on August 15, 1998.

Shortly after, the iMac was available in five colors, Tangerine, Lime, Strawberry, Blueberry, and Grape, shown in the image at right.

This bought Apple time, and saved it from the brink, but it invited a degree of derision from the PC camp.

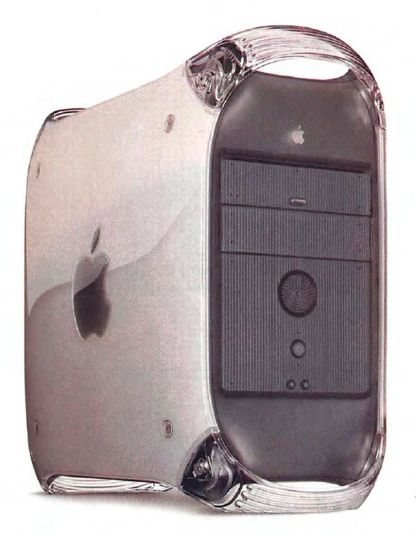

Very shortly thereafter, however, in late 1999 one model of the Macintosh computer turned out to be something to take very seriously indeed. The PowerMac G4 is pictured at left. Its processor was the first to exceed one gigaflop, and this meant that it came under export restrictions. Apple took note of this in its advertising, and referred to the computer as a "personal supercomputer". However, this was partly due to the fact that the export restrictions at the time were outdated, and they would soon be revised to avoid applying to microcomputers widely available to ordinary consumers.

The very first iMac used a 233 MHz PowerPC 750 processor, manufactured by both Motorola and IBM, and was thus designated a G3 system by Apple; later versions of the CRT-based iMac used faster versions of the processor. Apple continued to use the iMac brand name for LCD all-in-one computers using Intel x86 processors, and at the present day uses it for computers using their own ARM chips. It had been necessary for Apple to switch from the Motorola 68000 architecture to the PowerPC when Motorola discontinued development of that architecture in 1994.

The last version of the Atari ST was released in 1992, and discontinued very shortly after its release; and Commodore went bankrupt as the result of the failure of their CD32 computer, a more compact version of the Amiga, in 1994. Thus, the demise of the 68000, although it complicated (but did not completely prevent) attempts by enthusiasts to revive the Amiga architecture, was not to blame for the demise of these alternatives in the market.

Of course, the fact that the Macintosh wasn't a computer you could upgrade yourself outside of very narrow limits, and sold at a significant price premium, left typical PC users scratching their heads. This was true as far back as the days of the original Fat Mac, when you couldn't just easily and cheaply buy a 128K Mac and then later add memory to bring it up to 512K, and as recently as 2019, when the only Macintosh that you could open up and put peripheral boards in was the top-of-the-line one, the Mac Pro, at a price of $5,999.

The closed and restrictive nature of the App Store for the iPhone and iPad, and the appearance that Apple was emphasizing those products, and moving away from the Macintosh, also did not help matters.

There were and are people who are devoted to Apple products, and find PC-derived computers and Android smartphones to be far inferior. But Apple's products seem to be niche products, rather than being for everyone; budget-conscious consumers on the one hand, and technically-oriented enthusiasts who want to have control and freedom on the other both have reason to be less than enthused over Apple's products.

And, yet, how can one offer a premium-quality computing experience without doing much of what Apple is doing?

The fundamental problem, that the availability of third-party software is critical to the value of a computer, which leads to nearly everyone jumping on the bandwagon of the most popular machine, hence eliminating alternatives, doesn't seem to be solvable.

Of course, one other alternative survives in addition to the Macintosh. Linux.

When the Yggdrasil Linux CD became available at the end of 1992, the same year that Windows 3.1 came out, it became possible for ordinary people to actually try Linux for themselves.

Nowadays, of course, there are distributions on CDs on the covers of magazines, and Linux can be easily downloaded from the Internet. But back then, downloading a large operating system like Linux over a dial-up modem would not bear consideration.

Even more so than the Macintosh, however, Linux is too large a topic for me to adequately discuss here.

I have learned of another aspect of the history of computers that I have missed in these pages.

In 1992, the computer game Wolfenstein 3D was released for the IBM PC. Its graphics, making use of the new VGA graphics standard from IBM, were very impressive. DOOM, released in December 1993, took this even further.

The Amiga, and apparently also the Atari ST, stored images in their graphics hardware as multiple bit planes; the VGA graphics card for the IBM PC, however, in its 320 by 200 256-color mode stored images as a series of bytes in memory, one byte per pixel, not as eight bit planes with a single bit corresponding to a pixel in each.

This was better suited to the calculations needed to produce three-dimensional scenes with shading and lighting effects in a computer. As a result, Wolfenstein 3D and DOOM were never officially ported to either the Amiga or the Atari ST, although efforts were made to imitate those games on those platforms.
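The difference between the two layouts can be sketched in a few lines. This is only an illustration under simplifying assumptions: a single 16-pixel row, eight bit planes as in the comparison above, and helper names of my own invention. It is not a model of any actual Amiga, Atari ST, or VGA register interface.

```python
DEPTH = 8  # bits per pixel, i.e. 256 colors


def chunky_write(buf, i, color):
    """Byte-per-pixel layout: setting pixel i is a single byte store."""
    buf[i] = color


def planar_write(planes, i, color):
    """Bit-plane layout: setting pixel i touches one bit in each of DEPTH planes."""
    mask = 0x80 >> (i % 8)              # leftmost pixel is the high bit of its byte
    for bit in range(DEPTH):
        if (color >> bit) & 1:
            planes[bit][i // 8] |= mask
        else:
            planes[bit][i // 8] &= ~mask & 0xFF


def planar_read(planes, i):
    """Reassemble the color of pixel i from its bit in every plane."""
    mask = 0x80 >> (i % 8)
    return sum(1 << bit for bit in range(DEPTH) if planes[bit][i // 8] & mask)


width = 16
chunky = bytearray(width)
planes = [bytearray(width // 8) for _ in range(DEPTH)]
for i in range(width):
    color = (i * 37) % 256              # arbitrary test pattern
    chunky_write(chunky, i, color)
    planar_write(planes, i, color)

print(all(planar_read(planes, i) == chunky[i] for i in range(width)))  # True
```

A renderer that computes one texture-mapped pixel at a time does the equivalent of `chunky_write` on a byte-per-pixel display, but needs eight read-modify-write operations per pixel, or a separate conversion pass, on a bit-plane display.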

It wasn't until much later, in November of 1995, that 3dfx started manufacturing its first Voodoo Graphics chipset. Initially, for just under a year, it was sold to the makers of coin-operated arcade video games; only with memory becoming available at lower prices did 3dfx enter into arrangements for third-party companies like Diamond to produce graphics accelerator boards for the home market.

These very early systems only had 3D graphics functionality, and couldn't handle text or 2D graphics, and so video cards based on this technology were pass-through cards; the VGA output of a regular VGA graphics card was sent to the graphics accelerator card, which could either pass it through, or replace it with its own output when the computer was being used to play a game.

Thus, while I noted above that the availability of cheap clones of the IBM PC, due to their wide selection of software, was the likely cause of the decline of the Amiga and the Atari ST, I missed a more specific factor, relating to the significance of computer games in the home computer market.

At left is pictured an Internet appliance, the Netpliance I-Opener, which was offered for sale at $99 in late 1999. This was less than its cost of manufacture, but this was expected to be recouped as the device could only be used with an Internet service provided by its makers for $21 a month.

This did not work out as planned; hackers found a way to transform the device into a full-fledged computer, and the company mismanaged how it responded to this, leading to problems with the Federal Trade Commission. Of course, though, it is not a unique practice; video game consoles that can only use their manufacturer's game cartridges, and even inkjet printers that can only use ink supplied by their manufacturer are also often sold at a price involving an initial loss.

Be that as it may, this device is noteworthy for having on its keyboard a key with the ultimate special function, long sought after by programmers burning the midnight oil in intense coding sessions.

This is not an April Fool's joke. It really did bear, on its keyboard, a "Pizza" key!!

Of course, the key did not cause a pizza simply to materialize, nor did it directly initiate the preparation or cooking of one from stocks in one's refrigerator. Instead, it did something that was entirely technically feasible: it caused the web page for Papa John's pizza to come up, that company having paid a sponsorship fee that made an additional contribution to defraying the low cost of the computer.

None the less, I am inclined to view this as a legendary moment (at least, of a sort) in the history of computing.

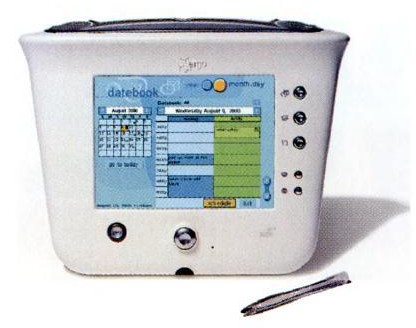

Another example of an Internet Appliance, but one that did not rely on subsidized pricing, was the Audrey from 2000, which initially sold for $599. This was sold by 3Com, which was best known for its line of telephone modems, and which also later acquired the Palm Pilot business. The unit later had a wireless keyboard available for it.

This unit was based on a National Semiconductor Geode processor. The Geode was a system on a chip with the x86 instruction set; the microprocessor portion was based on the Media GX processor from Cyrix. Eventually, this portion of National Semiconductor's business was sold to AMD. The Geode was also used in the One Laptop Per Child project.

I have heard that the name of the unit was chosen because of the famous and well-liked actress Audrey Hepburn, the gamine star of Breakfast at Tiffany's, which introduced the world to the song Moon River in a rendition quite unlike Andy Williams' later version. I think the choice of name was highly unfortunate, though, as when I first encountered it, what came immediately to my mind was the plant from The Little Shop of Horrors. However, it wasn't unfortunate naming, but the fact that you couldn't build an Internet Appliance for much less than a real PC, that accounted for its lack of success in the market.

Incidentally, Audrey Hepburn is an actress who came to the United States from Britain, while Katharine Hepburn, famous for Bringing up Baby(1), The African Queen, and for being channeled by the actress Kate Mulgrew during her performance as Captain Kathryn Janeway in Star Trek: Voyager, was an American actress; although they both came by the surname Hepburn honestly(2), they were not related.

It was at the 2005 WWDC, which began on June 6, 2005, that Apple announced that it would switch from using the PowerPC chip in Macintosh computers to Intel chips with the x86 architecture. As both the 68000 and the PowerPC were big-endian, while the x86 is little-endian, this led to some concern about a need for changes to the formats of files containing data.
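The concern is easy to demonstrate. The sketch below uses Python's standard `struct` module to write one 32-bit integer in both byte orders, and then shows what happens when a file written in one order is read back assuming the other; the sample value is arbitrary.

```python
import struct

value = 0x12345678

big = struct.pack(">I", value)      # big-endian, as on the 68000 and PowerPC
little = struct.pack("<I", value)   # little-endian, as on the x86

print(big.hex())     # 12345678
print(little.hex())  # 78563412

# Reading big-endian bytes as though they were little-endian gives a wrong value.
misread = struct.unpack("<I", big)[0]
print(hex(misread))  # 0x78563412

# A file format that fixes its byte order explicitly reads back the same everywhere.
assert struct.unpack(">I", big)[0] == value
```

A data file format that specifies its byte order explicitly, as in the last line, is unaffected by a change of processor; only formats that simply dump memory in the machine's native order need conversion.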

The eventful year of 2005 was also the one in which IBM withdrew from the personal computer business, selling their business in that area to Lenovo.

It was on November 10, 2020 that Apple announced the M1 processor, first in a line of chips based on the ARM architecture and designed by Apple itself, and the transition of the Macintosh from Intel processors to these new chips.

The history of the microcomputer up to this point has been mostly about individual microcomputers. The microchips within them, of course, played an important role as well, such as the Intel 8086, the Motorola 68000, and so on, but it was natural to discuss those chips in the context of the computer systems that used them.

While it would still be possible to continue a discussion of individual models of computer systems from Apple beyond this point, and some individual models from other makers are also of note, such as the Sony VAIO UX to be discussed on a later page, in general, in a world dominated by generic computers built with motherboards designed to specifications supplied by Intel and AMD, a new chip would not be associated with any one particular computer system the way the 80286 was associated with the IBM Personal Computer AT.

Thus, the history of the microcomputer continues on from this point as primarily a history of chips instead of a history of systems. Of course, particularly when one thinks of the experience faced by the user, it is also a history of successive releases of Microsoft Windows and other operating systems.

The IBM PC, from 1981, was mentioned above. It had a socket on the motherboard for the Intel 8087 floating-point coprocessor. The floating-point format that was ultimately embodied in the IEEE 754-1985 standard was, of course, originally devised by Intel for its 8087 co-processor for their 8086 microprocessor; the 8087 itself was announced in 1980.

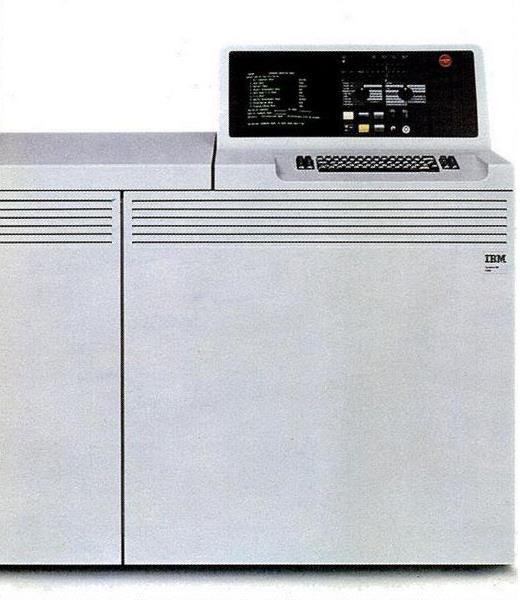

Since it looked like that format was going to become the standard, however, other manufacturers implemented it in their computers even before the 8087 came out, let alone before the IEEE-754 standard was finalized and officially adopted. One example of this was the IBM System/38 computer, announced on October 24, 1978, and pictured below.

The IBM System/38 was the predecessor of the IBM AS/400 line of computers, and of IBM's current IBM i operating system for its POWER servers (formerly, it sold POWER servers designed for direct use of the PowerPC instruction set separately from those intended as "System i" machines).

It was based on the technology developed for IBM's Future Systems (FS) project. This project became known to the public in May 1973, as a consequence of disclosures in the Telex vs. IBM antitrust trial. Originally, it was expected that this project would replace the IBM System/370 and change the direction of the industry; notably, industry pundit James Martin gave seminars on how to plan for this large change.

IBM abandoned the plan to switch to FS in 1975, but continued developing one of the machines in that series, and this was what became the System/38.

The story of aids to computation, from its earliest beginnings, isn't the kind of story that would lead to conclusions; rather, it is a saga of human achievement.

But the story of the microcomputer, being a time of intense competition, with the rise and fall of many computer products and companies, would seem to be a testbed for what works and what doesn't. Are there conclusions to be drawn from that period?

In some ways, to me, the microcomputer era illustrated principles that were well-known from the mainframe era that preceded it. Home users, buying a computer for personal use, strange to relate, just like the managers who oversaw contracts for mainframe systems costing hundreds of thousands of dollars, sought to avoid vendor lock-in, to have an upgrade path, to have software availability. Joe Consumer was no fool, and where the same basic principles applied to small computers as to large ones, they were recognized.

Of course, though, while there were some basic commonalities, there were also huge differences between the two markets.

And there were major transitions during the microcomputer era.

There was the technical shift from 8-bit computers to computers that were 16 bits and larger. There were two instances where an upgrade path of a sort was offered across this huge technical gulf: the IBM PC, where the 16-bit 8086 architecture had so much in common with the previous popular 8080 of the 8-bit era that many applications from CP/M were ported to the IBM PC, and the Apple IIgs, which used the WDC 65C816 chip, allowing a new 16-bit mode to be added to the 8-bit 6502-based Apple computer.

There was a gradual shift from computers that came with a BASIC interpreter, for which users wrote a lot of their own programs, to computers that mostly relied on the use of purchased software; this shift was then made complete by the transition to computers with a GUI, like the Macintosh, or to using Microsoft Windows on the PC platform.

From my point of view, one of the saddest things that happened was the abandonment of the 68000 architecture. At the time, it was thought that it would be easier to keep up with the technical development of the successors of the 8086 by building on a simple RISC design, as the PowerPC was, instead of the 68000, which, like the 8086, was CISC. But this broke software compatibility, and so while the Macintosh survived the transition, the Atari ST and the Amiga did not.

And the PowerPC ended up as only one RISC architecture among many, unlike the 68000, which for a time was the one obvious alternative to the 8086.

So there is no longer a real battle for the desktop; only AMD can compete against Intel - at least, as far as most of us are concerned. Now that Apple has abandoned the Intel Mac for Apple Silicon, based on the ARM architecture, there is indeed another ISA in use on the desktop, but the Macintosh is a product with a premium price. ARM chips are available from other suppliers... for use in smartphones. While Apple has proven that the ARM architecture is also suitable for more heavyweight CPUs, there is no third-party off-the-shelf supplier of that kind of ARM chip because there aren't really any prospective purchasers of such a chip out there.

The Sony PlayStation 3 video game console used the Cell processor, which included a main CPU with the PowerPC architecture, and so video game consoles are an obvious potential market for a powerful processor with an alternative ISA.

Lessons can be learned from the individual stories of computers that succeeded for a time, compared to those that failed quickly. Several computers that were designed to give the computer maker the additional profits from controlling the market for software for those computers failed, because such control reduced the value of those computers to their purchasers.

The IBM PS/2, which offered no real benefit for transitioning away from an existing standard to one that was more proprietary, did not work out well for IBM, even if it did lead to the transition of the PC in general to the 3.5" floppy and to a smaller keyboard connector and the use of a similar connector for the mouse.

The Macintosh and the HP 9845, each in their own way, showed that a product certainly could be a success on the basis of sheer innovation. And the Commodore 64 demonstrated that offering a reasonably good product at an excellent price was still a formula for success as well. But the biggest success, that of what is often termed the "Wintel platform", taught the most dramatic lesson: the importance of having the value of a computer multiplied by a large pool of available software.

Since tablets and smartphones, unlike desktop personal computers, were a new market that initially wasn't saturated, that was where the excitement was, with many pundits viewing it as foolish to expect any more real change or progress in the desktop PC except for the gradual improvement coming from technical progress. There's too much software for Windows for any new computer to come along and generate interest, so the war for the desktop is over.

While that may not necessarily be certain, I definitely have to admit that I know of no obvious way for anyone to start a business making a new, incompatible, desktop personal computer that can somehow overcome that obstacle. The one thing that might have some hope of success would be to address a niche market with something well suited to it.

When I recently added a mention of the HP 9845 computer to this site, since some referred to it as the first workstation computer, I asked myself if I had overlooked any other computers that were very influential.

But which computers were the most influential?

During the microcomputer era, the most obvious computers in that category would be the IBM PC, for setting the standards still followed today, and the Macintosh, for establishing the importance of the GUI.

Prior to the microcomputer era, the most influential computers would appear to have been the IBM 704, the IBM System/360, and the DEC PDP-11.

Some other computers can be identified as runners-up as well.

The IBM 305 RAMAC introduced the hard disk drive.

The PDP-8 established that a great many individuals would want to purchase a computer if it were at all possible, so that later, when it became possible to make a computer like the Altair 8800, it was realized there would be a market for it. With DECtape, the PDP-8 also showed that even a lesser substitute for a hard disk drive as a random-access storage device would be useful and desirable.

The floppy disk drive was invented by IBM to load the System/370 Model 135 and Model 145 with microcode whenever they were turned on, prior to booting up (Initial Program Load, or IPL). System/370 was a revision of the System/360 that was announced on June 30, 1970; Models 155 and 165 were included in that initial announcement. Model 145 was announced on September 23, 1970, and Model 135 was announced on March 8, 1971.

The Altair 8800 launched the microcomputer revolution. Microsoft BASIC was developed for it, and when a floppy disk was offered for the Altair 8800 as a peripheral, CP/M was developed for it by Digital Research as well. As Microsoft MS-DOS was based closely on CP/M, this meant that the Altair 8800 had a very big influence on the later IBM PC.

And CP/M, in turn, was very similar to OS/8, an operating system for larger PDP-8 systems that included a hard disk drive.

So the most influential computer systems were well-known, as one might expect, rather than obscure or forgotten.

(1) As far as I know, Bringing Up Baby might actually have been one of Katharine Hepburn's lesser-known movies. However, it sticks in my mind because of one personal incident I experienced. While I was riding a bus going between Edmonton and Calgary, early in the morning, the bus driver came on the intercom to announce that he was compelled by corporate orders to present a movie on the en-route television screens with which the bus was equipped, and thus would be unable to accede to requests to cease and desist.

The movie was Bringing Up Baby, on a video tape supplied by Turner Classic Movies. Understandable protests from sleepy passengers demanding that the movie be shut off had no result, just as we had been warned. But then I modestly enquired of the driver whether, if he could not stop the movie, he would at least be permitted to adjust its volume down to a more reasonable level - and, indeed, he then did so.

I steadfastly refuse to consider this incident as convincing evidence that I am some sort of super-intelligent being, however.

(2) While the British actress born Audrey Ruston did not change her surname to Hepburn at the urging of some Hollywood mogul, her father had changed his surname from Ruston to Hepburn-Ruston in the mistaken belief he had unearthed an ancestral connection to an aristocratic Hepburn family in Britain; thus, this became her surname as well, then shortened for American audiences. So while she personally came by her surname honestly, it is still not the surname associated with her bloodline from time immemorial, hence a footnote was in order.